Serverless AI Infrastructure Going into 2026: Sandboxes, GPUs, and More

At Koyeb, we are building high-performance serverless infrastructure for AI. Deploy your workloads on serverless GPUs, next-generation AI accelerators, and CPUs. Our platform runs fully isolated, secure microVMs on bare-metal servers around the world, with autoscaling, scale-to-zero, and cold starts as low as 250ms.

2026 is shaping up to be even bigger than 2025 for the AI stack: AI agents, AI-generated code, and multi-modal models are changing how applications are built and deployed. AI is becoming part of every stack, just as the cloud became essential.

Like everyone building in AI, we had a busy 2025. As more and more teams moved from PoCs and demos to production, we focused on building the high-performance serverless infrastructure that makes deploying and scaling AI reliable in the real world. We shipped many features and improvements designed to make your AI deployment experience faster, smoother, and more cost-effective:

- Koyeb Sandboxes

- Light Sleep Scale-to-Zero

- Serverless GPU Price Drop

- Koyeb MCP Server

- Tenstorrent Cloud Instances

- New AI Models Available in One-Click Catalog

- TCP Proxy to Expose TCP Ports Publicly

- Faster Deployments

What’s more, we partnered with leading companies and teams shaping the future of cloud and AI infrastructure, organized and participated in over 50 community events, and published a bunch of helpful resources for developing and deploying AI apps.

In case you missed it, here’s a comprehensive rundown of everything that went live in 2025 to help you build, deploy, and scale your AI workloads on serverless infrastructure.

Koyeb Sandboxes Go Live

We launched Koyeb Sandboxes after working closely with teams running AI-generated code at massive scale on Koyeb. Those teams needed to spin up thousands of workloads every day securely, cost-effectively, and without building custom infrastructure abstractions themselves.

Koyeb Sandboxes package those requirements into a single, easy-to-use experience: ephemeral, fully isolated environments for AI agents, code generation, and more.

You can get started with Koyeb Sandboxes using our Python and JavaScript SDKs. Spin up a sandboxed environment whenever you (or your AI agent) need one, run commands, manage files, expose ports, and shut it down, all without worrying about the underlying infrastructure.

Here are helpful links to get started with Koyeb Sandboxes:

- Get up and running with the Koyeb Sandbox Quickstart.

- Understand the Sandbox lifecycle.

- Learn how to take actions on files and folders.

- Find out how to run commands in your sandboxes.

- Check out our tutorials on secure code execution using OpenAI Codex and the Claude Agent SDK with Koyeb Sandboxes.

Light Sleep Scale-to-Zero Enabled

On our journey to deliver a true serverless experience, light sleep scale-to-zero was a major milestone.

Bringing it to life required reworking core parts of the platform, from VM snapshotting to kernel-level networking. Thanks to eBPF isolation, snapshots, and other behind-the-scenes engineering, VMs can now wake from an idle state in as little as 250ms.

Read the full story of how we built light sleep to enable an even faster version of scale-to-zero.
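To get a feel for wake-up time on your own service, you can compare how long the first request takes after a period of inactivity with an immediate follow-up request. The sketch below uses only Python's standard library; it is a rough client-side measurement that also includes network latency, not how the 250ms figure above was measured, and the function names are illustrative.

```python
import time
import urllib.request


def time_request(url: str, timeout: float = 30.0) -> tuple[int, float]:
    """Send one GET request and return (HTTP status, elapsed seconds)."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        resp.read()  # include the body transfer in the measurement
        status = resp.status
    return status, time.perf_counter() - start


def compare_cold_and_warm(url: str) -> dict[str, float]:
    """Time a first request (possibly waking an idle service) and a follow-up."""
    _, first = time_request(url)
    _, second = time_request(url)
    return {
        "first_s": first,
        "second_s": second,
        # Rough estimate only: network jitter can make this noisy or even negative.
        "wake_overhead_s": first - second,
    }
```

Let the service go idle first, then call `compare_cold_and_warm` with your service's public URL. Because `urlopen` opens a new connection on each call, both timings include connection setup, so the difference roughly approximates the wake-up overhead.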

Released the Koyeb MCP Server & Guides to Deploy Your Own Remote MCP Server

2025 was a breakthrough year for MCP! We launched the Koyeb MCP Server, letting you interact with your Koyeb resources using natural language.

We also released resources to help you host your own MCP servers remotely.

Learn how to deploy your own MCP server on Koyeb's serverless infrastructure for low-latency and reliable deployments.

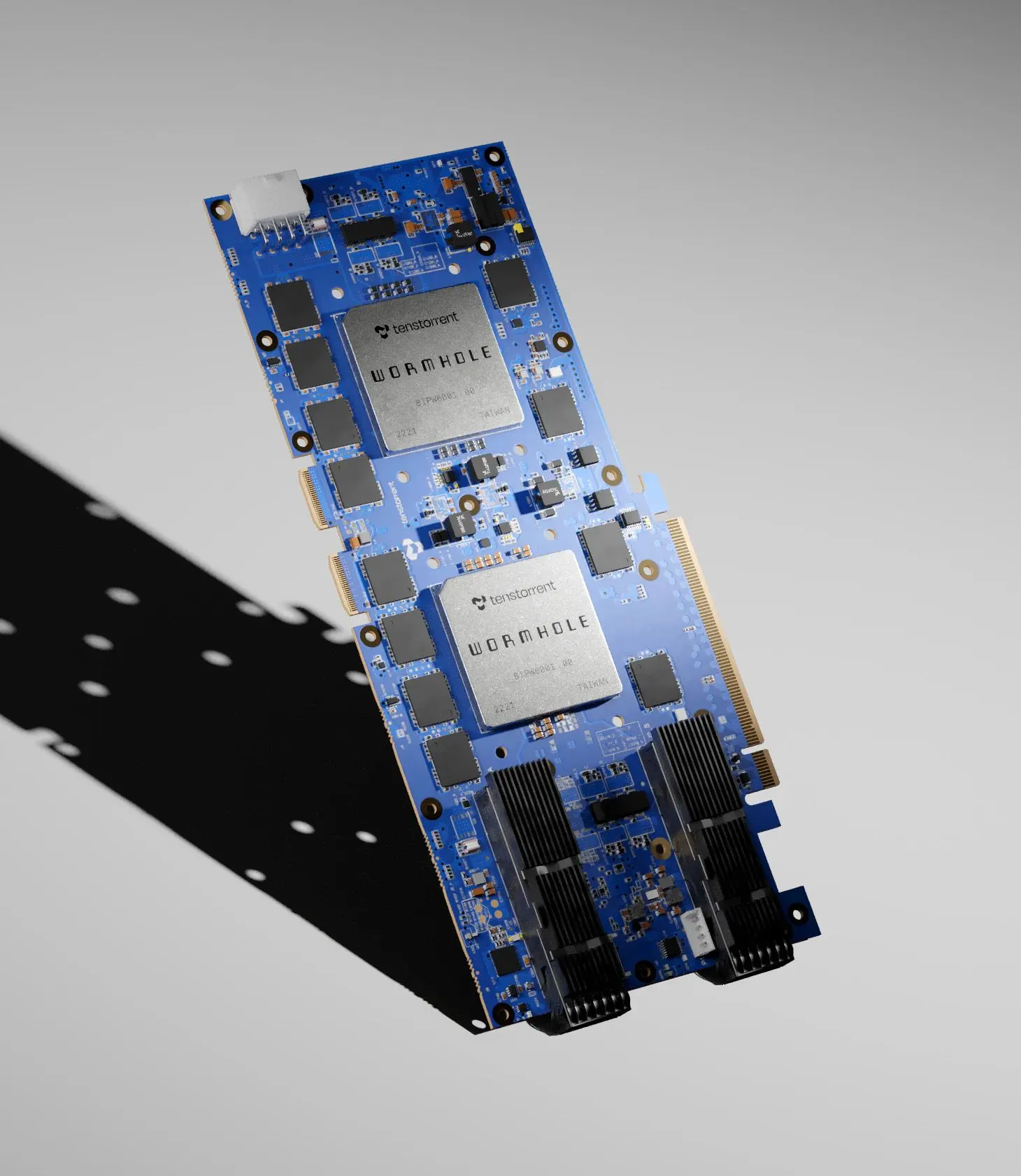

Introduced Tenstorrent Cloud Instances

As you may already know, we're committed to bringing alternative accelerators to market to foster innovation in the AI infrastructure space. Our platform is hardware-agnostic, which means we can provide the same seamless Koyeb deployment experience on any hardware.

In 2025, we added Tenstorrent Cloud Instances, providing on-demand access to Tenstorrent's Tensix Processor and the open-source TT-Metalium SDK.

- TT-N300S: With one n300s card, this instance has 24GB of GDDR6 and 192MB of SRAM, provides up to 466 FP8 TFLOPS, and comes with 64GB of RAM and 4 vCPUs.

- TT-Loudbox: With four n300s cards meshed together, this instance has 96GB of GDDR6 and 768MB of SRAM, provides up to 1864 FP8 TFLOPS, and comes with 256GB of RAM and 16 vCPUs.

These new Tenstorrent instances come with all the native features of the Koyeb platform to bring developers the fastest way to build AI models and run inference workloads in on-demand environments, with zero infrastructure management.
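Every TT-Loudbox figure listed above is exactly four times the single-card TT-N300S figure, so aggregate capacity scales linearly with the number of n300s cards. The short Python check below encodes the two specs; the `InstanceSpec` class is illustrative, and aggregate peak TFLOPS is an upper bound, since how much of it a model actually uses depends on how the workload is split across cards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceSpec:
    gddr6_gb: int
    sram_mb: int
    fp8_tflops: int  # peak FP8 throughput
    ram_gb: int
    vcpus: int

    def scaled(self, factor: int) -> "InstanceSpec":
        """Return every figure multiplied by `factor` (linear scaling)."""
        return InstanceSpec(
            self.gddr6_gb * factor,
            self.sram_mb * factor,
            self.fp8_tflops * factor,
            self.ram_gb * factor,
            self.vcpus * factor,
        )


# Figures taken from the TT-N300S and TT-Loudbox descriptions above.
TT_N300S = InstanceSpec(gddr6_gb=24, sram_mb=192, fp8_tflops=466, ram_gb=64, vcpus=4)
TT_LOUDBOX = InstanceSpec(gddr6_gb=96, sram_mb=768, fp8_tflops=1864, ram_gb=256, vcpus=16)

# Four single-card instances add up to one TT-Loudbox, field for field.
print(TT_N300S.scaled(4) == TT_LOUDBOX)  # True
```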

New Models and AI Starter Apps in Koyeb's One-Click Deploy Catalog

Over a hundred ready-to-use AI models, starter apps, and templates are now a click away. Perfect for inference, fine-tuning, or getting started with adding AI to your stack.

Discover SOTA AI models from Mistral AI, DeepSeek, Black Forest Labs, Google DeepMind, and more in our One-Click Deploy Catalog.

All deployments run on production-grade serverless GPUs, with built-in autoscaling and scale-to-zero, so you can move from experiments to production. Whether you’re serving models for inference or fine-tuning, Koyeb handles capacity, scaling, performance, and reliability, so you can focus on shipping AI, not managing infrastructure.

Serverless GPU Price Drop

Running your AI workloads on high-performance serverless GPUs is easier than ever thanks to our price drops on L40S, A100, and H100 GPUs.

AI infrastructure is expensive, and optimizing costs can change what experiments you run. Lower prices mean you can iterate faster, run bigger models, or just try ideas that previously felt “too expensive.”

| Serverless GPU Instance | Price per Hour | Price Reduction | VRAM | vCPU | RAM |
|---|---|---|---|---|---|
| L40S | $1.20 | 23% | 48 GB | 15 | 64 GB |
| A100 | $1.60 | 20% | 80 GB | 15 | 180 GB |
| H100 | $2.50 | 24% | 80 GB | 15 | 180 GB |
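Since the table gives post-drop prices and rounded reduction percentages, the approximate previous prices follow from new = old × (1 − reduction). The Python sketch below does that arithmetic and adds a helper for estimating what a job costs at the new rates, assuming you pay only for the hours your instances actually run. The dictionary and function names are illustrative, and the back-computed prices are approximate because the percentages are rounded.

```python
# Post-drop hourly price (USD) and advertised reduction, from the table above.
GPU_PRICING = {
    "L40S": (1.20, 0.23),
    "A100": (1.60, 0.20),
    "H100": (2.50, 0.24),
}


def implied_previous_price(new_price: float, reduction: float) -> float:
    """Back out the pre-drop price from new = old * (1 - reduction)."""
    return new_price / (1.0 - reduction)


def job_cost(gpu: str, hours: float, gpus: int = 1) -> float:
    """Cost of running `gpus` instances for `hours` at the current hourly price."""
    price, _ = GPU_PRICING[gpu]
    return round(price * hours * gpus, 2)


for name, (price, cut) in GPU_PRICING.items():
    old = implied_previous_price(price, cut)
    print(f"{name}: ~${old:.2f}/hour -> ${price:.2f}/hour")
# Prints:
# L40S: ~$1.56/hour -> $1.20/hour
# A100: ~$2.00/hour -> $1.60/hour
# H100: ~$3.29/hour -> $2.50/hour
```

For example, `job_cost("H100", 10)` returns `25.0`: ten hours on one H100 at the new rate.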

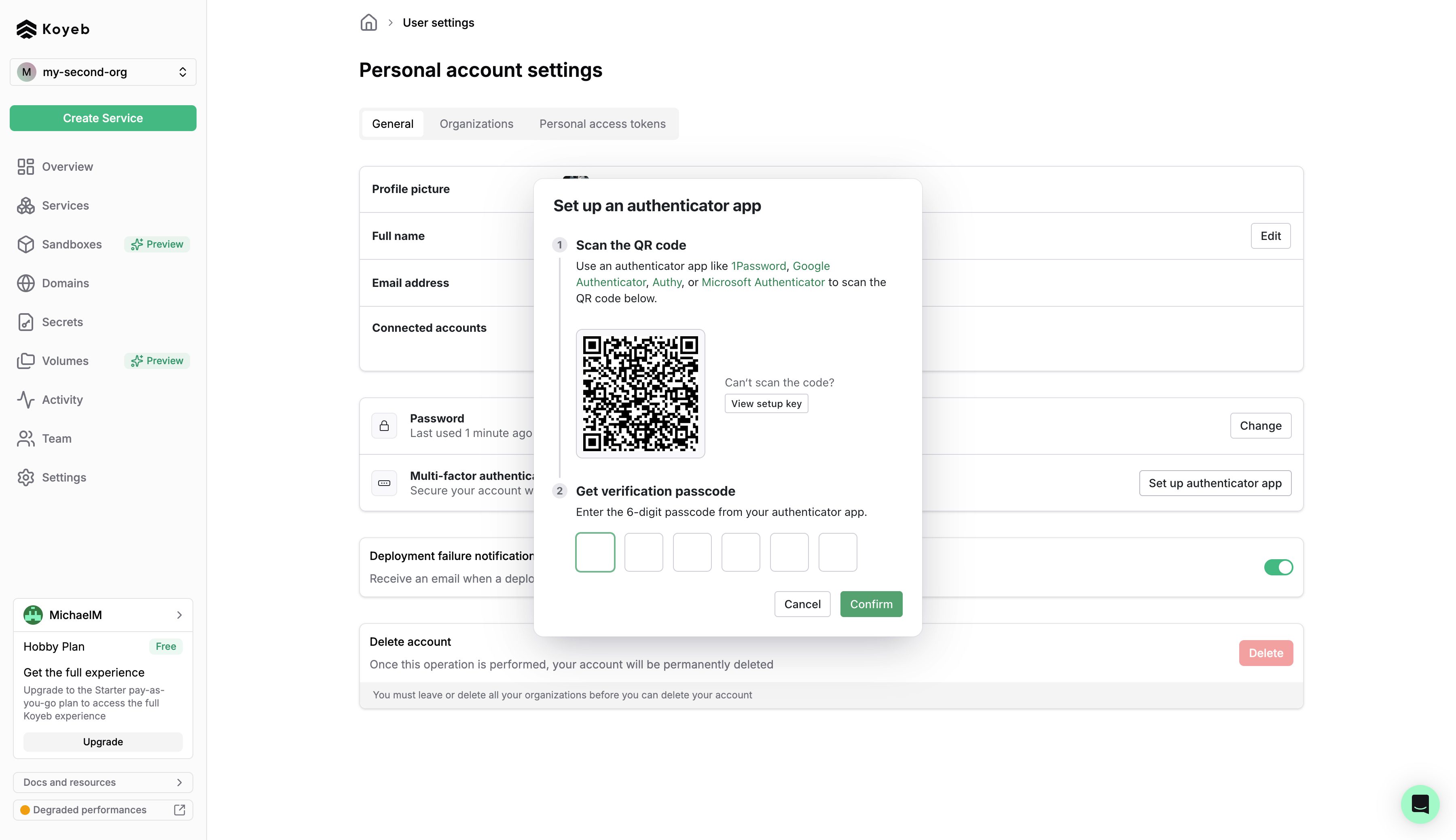

New Authentication Features

To support more advanced features and offer a smoother sign-in experience, we’ve introduced a fully redesigned authentication system with robust security features, including 2FA, MFA (Multi-Factor Authentication), and passkeys.

Learn more about these new features and how to get started by reading the full announcement.

Announced TCP Proxy

Up until last year, services running on Koyeb could only be publicly exposed via HTTP, HTTP/2, WebSocket, and gRPC protocols.

In 2025, we made it easy to expose TCP ports publicly. This is useful for debugging, testing multi-service systems, or connecting local tools to a cloud environment without the usual networking headaches.

Whether you’re running:

- Databases like MySQL, MongoDB, Redis

- Remote access protocols like SSH, RDP, VNC

- IoT protocols like MQTT, AMQP

You can now reach them easily over the public Internet, with no need for complex proxy setups or custom routing rules.

Run and scale TCP-based applications on high-performance serverless infrastructure.
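Once a TCP port is exposed, a quick way to confirm it is reachable from outside is to attempt a TCP handshake against it. The standard-library Python sketch below does exactly that; `tcp_reachable` is an illustrative helper, and the host and port you pass should be the public address shown for your service (not assumed here). A successful handshake only shows that the port accepts connections, not that the protocol on top (MySQL, SSH, MQTT, ...) is healthy.

```python
import socket


def tcp_reachable(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP handshake to host:port succeeds within `timeout`."""
    try:
        # create_connection resolves the host and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, DNS resolution failures, and timeouts.
        return False
```

For a protocol-level check, you would follow the handshake with a real client for that protocol, such as a database driver or an SSH client.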

Faster Deployments, Faster Time to Production

In 2025, we significantly upgraded Koyeb's serverless engine to speed up deployments and scaling.

- Up to 60% faster time to healthy deployments

- 2x faster startup time for GPU-backed services

- Faster Koyeb Sandbox boot times

- Smarter wake-ups during new deployments

- Improved out-of-memory (OOM) detection

Together, these improvements shorten the path to production while increasing reliability for real-world AI workloads.

Launched the Koyeb Partner Hub

Since day 1, we've teamed up with leading companies to drive the next wave of global AI infrastructure. Our Partner Hub proudly showcases these incredible collaborations.

We have a full ecosystem of partners; here are a few of them:

- ⚙️ Hardware partners: Tenstorrent

- ☁️ Cloud platform partners: Vultr and MongoDB

- 🧑‍💻 Technology alliance partners: Ollama, Tailscale, Pruna AI

If you're interested in becoming a Koyeb partner, fill out our short form and our partnerships team will reach out with next steps.

Community & Events

In 2025, we brought the global AI engineering community together through 20+ events across SF, Paris, and NYC, including meetups, hackathons, dinners, happy hours, and our first conference!

A major milestone was hosting the first AI Engineer Conference in Paris, where we gathered top AI builders, founders, and leaders to share how AI is being built and deployed in production today.

- 🎥 Catch past Koyeb talks

- 📅 Join us at an upcoming event near you

- 🙌 Partner with us to co-host or collaborate on an event

Docs, Tutorials, Videos, & Other Resources

Throughout the year, we published a bunch of helpful resources to help you develop and deploy AI apps:

- Tutorials for building with OpenAI Apps SDK and Codex, Anthropic's Claude Agent SDK, Unsloth, and more

- Docs to get started with Koyeb Sandboxes, expose TCP ports, and learn how to enable 2FA, MFA, and passkeys

- Blog posts on new features like the JS SDK for Sandboxes, engineering deep dives like chasing a latency spike across a globally distributed stack, and customer stories from eToro, Scenario, and Anyshift.

- Videos covering how to build AI apps on Koyeb and educational content like "Why run AI-generated code in a sandbox?"

Check out the Koyeb Community for our weekly changelog updates, or to ask questions and share your projects.

Coming Next in 2026

2026 is shaping up to be another big year for AI. AI-generated code, coding agents, and multi-modal models are quickly moving from experiments to production. The infrastructure powering them needs to keep up.

At Koyeb, we're building high-performance serverless infrastructure so teams can focus on building and deploying AI without worrying about the underlying infrastructure. From Sandboxes designed to safely execute AI-generated code, to serverless GPUs for inference and fine-tuning, to scaling primitives built for real-world workloads, everything we shipped in 2025 was about one thing: making AI in production fast, reliable, and effortless.

- Best-in-Class Performance: Leverage cutting-edge GPUs, next-gen AI accelerators, and CPUs that automatically scale to handle your workloads.

- Lower Costs: Pay only for what you use, with reduced GPU costs and scale-to-zero efficiency.

- Fast, Reliable and Effortless Deployments: From new one-click deployments to sandboxed environments, we’re removing friction so you can focus on building and deploying your ideas.

As always, your feedback drives us. Let us know what features you want to see on the platform with our feature request platform.

Big updates are coming, so stay tuned! Until then, we’re excited to see how you use Koyeb to build and scale your applications.

If this work sounds fun to you, check out our available engineering roles!