Koyeb Serverless GPUs: Slashing Prices on A100, H100, and L40S by Up to 24%

At Koyeb, we provide high-performance serverless infrastructure to run and scale dedicated GPU containers in production with zero configuration required. No ops, no servers, no infrastructure management. We automatically scale GPUs up when needed and down to zero when idle.

GPUs are expensive. Everyone knows it. We believe high-performance AI infrastructure should be accessible to everyone: from teams running hundreds of GPUs that need to scale on different criteria while keeping costs under control, to AI builders who want occasional capacity to experiment, deploy, and fine-tune models.

Today, we’re excited to share that we’re dropping our prices by up to 24% on NVIDIA’s L40S, A100, and H100 GPUs, making high-performance AI workloads even more accessible on Koyeb.

Making GPU Power Accessible to Every Builder

GPUs are one of the biggest infrastructure costs in AI, often out of reach for many teams. At Koyeb, we’re changing that by making high-performance compute accessible to every builder and organization.

Our serverless GPU platform scales from zero to hundreds of replicas automatically, so you only pay for what you use. No idle costs, no infrastructure management.

Now, we’re going further. With reduced prices across our most popular NVIDIA GPUs, it’s easier than ever to experiment, fine-tune, and deploy AI workloads at scale: faster, more efficiently, and without fear of the bill.

Up to 32% More Compute on L40S, A100, and H100 GPUs

TL;DR: Lower prices. More compute. Same serverless experience.

| Serverless GPU Instance | Price Per Hour | Price Reduction | VRAM | vCPU | RAM |
|---|---|---|---|---|---|
| L40S | $1.20/hour | 23% | 48 GB | 15 | 64 GB |
| A100 | $1.60/hour | 20% | 80 GB | 15 | 180 GB |
| H100 | $2.50/hour | 24% | 80 GB | 15 | 180 GB |

All GPU instances are billed by the second.
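The "up to 32% more compute" figure follows from the price cuts: if a price drops by a fraction r, the same budget buys 1 / (1 - r) times as many GPU-hours. The sketch below works through that arithmetic and a per-second billing example using the prices in the table. The function names are illustrative and not part of any Koyeb API, and the billing function assumes no minimum charge or rounding, which this post does not specify.

```python
# Hourly prices (USD) and price reductions taken from the table above.
PRICES = {"L40S": 1.20, "A100": 1.60, "H100": 2.50}
REDUCTIONS = {"L40S": 0.23, "A100": 0.20, "H100": 0.24}


def extra_compute(reduction: float) -> float:
    """Fractional increase in GPU time the same budget buys after a price cut."""
    return 1.0 / (1.0 - reduction) - 1.0


def cost_for_seconds(gpu: str, seconds: int) -> float:
    """Cost in USD of one instance running for `seconds`.

    Assumption: pure per-second proration of the hourly price, with no
    minimum charge or rounding.
    """
    return PRICES[gpu] * seconds / 3600.0


if __name__ == "__main__":
    for gpu, r in REDUCTIONS.items():
        print(f"{gpu}: {extra_compute(r):.1%} more compute for the same budget")
    print(f"H100 for 90 seconds: ${cost_for_seconds('H100', 90):.4f}")
```

For the H100, a 24% cut works out to roughly 31.6% more GPU time per dollar, which rounds to the 32% in the heading above.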

Run your AI workloads on high-performance serverless GPUs. Enjoy native autoscaling and Scale-to-Zero.

More Than Just a Price Drop: An Efficient and Scalable Serverless Platform

With this change, you can stretch your computing budget while keeping all the benefits of serverless:

- Deploy containers from any registry using the Koyeb API, CLI, or control panel.

- Scale-to-Zero with reactive autoscaling based on requests per second, concurrent connections, or P95 response time.

- Pay only for what you use, per second.

- Dedicated GPU performance without managing underlying infrastructure.

- Built-in observability, metrics, and more.

- One unified platform to deploy and scale all your AI workloads across GPUs, CPUs, and accelerators.
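As a rough illustration of how Scale-to-Zero and per-second billing combine, the sketch below compares an always-on instance with one that is billed only while active. The six active hours per day is a hypothetical workload, and the model assumes billing stops entirely at zero replicas and ignores cold-start time. It is a back-of-the-envelope estimate, not Koyeb's billing logic.

```python
# Hourly prices in USD, taken from the pricing table in this post.
HOURLY_PRICE_USD = {"L40S": 1.20, "A100": 1.60, "H100": 2.50}


def monthly_cost(gpu: str, active_hours_per_day: float, days: int = 30) -> float:
    """Cost in USD for one instance that is billed only while active.

    Assumes billing stops entirely once the service has scaled to zero and
    ignores cold-start time; both are simplifications for illustration.
    """
    if not 0 <= active_hours_per_day <= 24:
        raise ValueError("active_hours_per_day must be between 0 and 24")
    per_second = HOURLY_PRICE_USD[gpu] / 3600
    active_seconds = active_hours_per_day * 3600 * days
    return per_second * active_seconds


if __name__ == "__main__":
    always_on = monthly_cost("A100", 24)
    bursty = monthly_cost("A100", 6)  # hypothetical: 6 active hours per day
    print(f"A100 always on:        ${always_on:,.2f}")
    print(f"A100 6 h/day, to zero: ${bursty:,.2f}")
    print(f"Savings:               {1 - bursty / always_on:.0%}")
```

Under these assumptions, a service that is active six hours a day costs a quarter of what the same instance would cost running around the clock.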

Build fast. Experiment more. Scale on-demand.

Multi-GPU A100 and H100 Configurations

The price drop applies to multi-GPU configurations, including 2×, 4×, and 8× A100 and H100 setups. Whether you’re running inference or fine-tuning ML models, you can now use multi-GPU configurations at 20% (A100) to 24% (H100) below the previous price.

Combined with Scale-to-Zero and autoscaling, these lower prices on H100 and A100 serverless GPUs mean you pay less per GPU-hour, and only for the time your workloads actually run.

| Serverless GPU Instance | Price Per Hour | VRAM | vCPU | RAM |
|---|---|---|---|---|
| A100 | $1.60/hour | 80 GB | 15 | 180 GB |
| 2× A100 | $3.20/hour | 160 GB | 30 | 360 GB |
| 4× A100 | $6.40/hour | 320 GB | 60 | 720 GB |
| 8× A100 | $12.80/hour | 640 GB | 120 | 1.44 TB |
| H100 | $2.50/hour | 80 GB | 15 | 180 GB |
| 2× H100 | $5.00/hour | 160 GB | 30 | 360 GB |
| 4× H100 | $10.00/hour | 320 GB | 60 | 720 GB |
| 8× H100 | $20.00/hour | 640 GB | 120 | 1.44 TB |
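The multi-GPU rows in the table are exact multiples of the single-GPU rows: price, VRAM, vCPU, and RAM all scale linearly with the GPU count. The sketch below reproduces the table from the two single-GPU entries. The `GpuSpec` type and `multi_gpu` helper are hypothetical names used for illustration, not part of the Koyeb API or CLI.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    price_per_hour: float  # USD
    vram_gb: int
    vcpu: int
    ram_gb: int


# Single-GPU rows from the table above.
SINGLE = {
    "A100": GpuSpec(1.60, 80, 15, 180),
    "H100": GpuSpec(2.50, 80, 15, 180),
}

# GPU counts listed in this post.
SUPPORTED_COUNTS = (1, 2, 4, 8)


def multi_gpu(gpu: str, count: int) -> GpuSpec:
    """Scale a single-GPU spec linearly to a multi-GPU configuration."""
    if count not in SUPPORTED_COUNTS:
        raise ValueError(f"count must be one of {SUPPORTED_COUNTS}")
    base = SINGLE[gpu]
    return GpuSpec(
        price_per_hour=round(base.price_per_hour * count, 2),
        vram_gb=base.vram_gb * count,
        vcpu=base.vcpu * count,
        ram_gb=base.ram_gb * count,
    )


if __name__ == "__main__":
    for gpu in SINGLE:
        for count in SUPPORTED_COUNTS:
            spec = multi_gpu(gpu, count)
            print(f"{count}x {gpu}: ${spec.price_per_hour:.2f}/hour, "
                  f"{spec.vram_gb} GB VRAM, {spec.vcpu} vCPU, {spec.ram_gb} GB RAM")
```

Because pricing is linear, there is no per-GPU premium or discount for larger configurations: an 8× H100 instance costs the same per hour as eight single H100 instances.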

Get Started Today

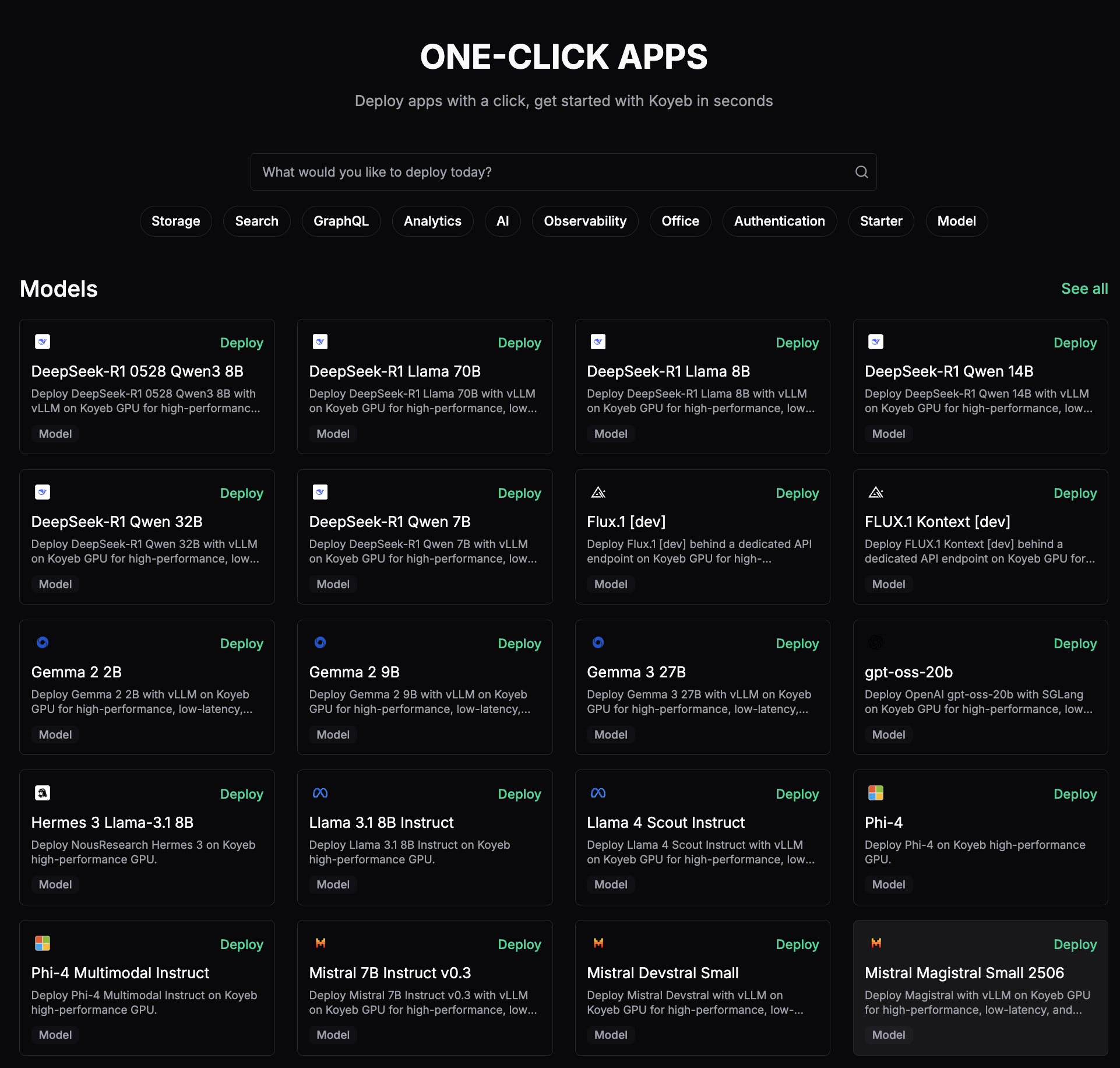

Getting started with GPU-powered AI on Koyeb is effortless. With 1-click deployments for popular models and frameworks, you can launch and scale GPU workloads in minutes.

As of today, Koyeb Serverless GPUs are available at up to 24% lower prices, so you can build, experiment, and scale to zero without fear of the bill.