High-performance Infrastructure

Deploy intensive applications across GPUs, CPUs, and accelerators in minutes, and scale across 50+ locations.

Trusted by the most ambitious teams

Next-generation cloud experience

Accelerated infrastructure

Serverless containers

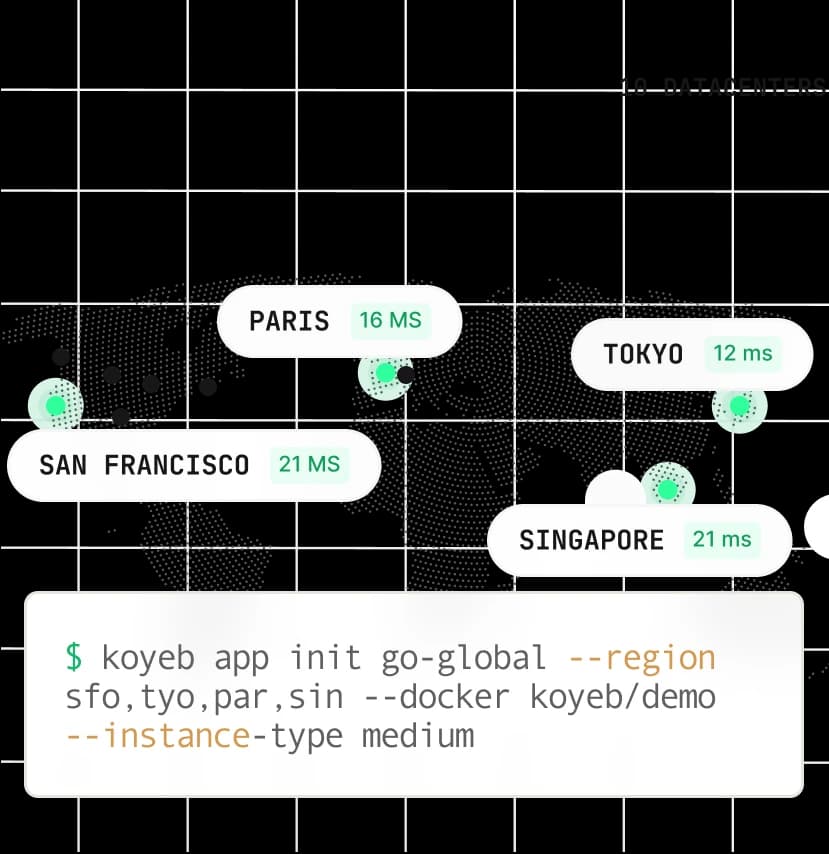

Available globally and locally

Build and deploy anything

The serverless runtime

Deploy any application seamlessly with native support for popular languages and Docker containers, without any modification.

Deploy now

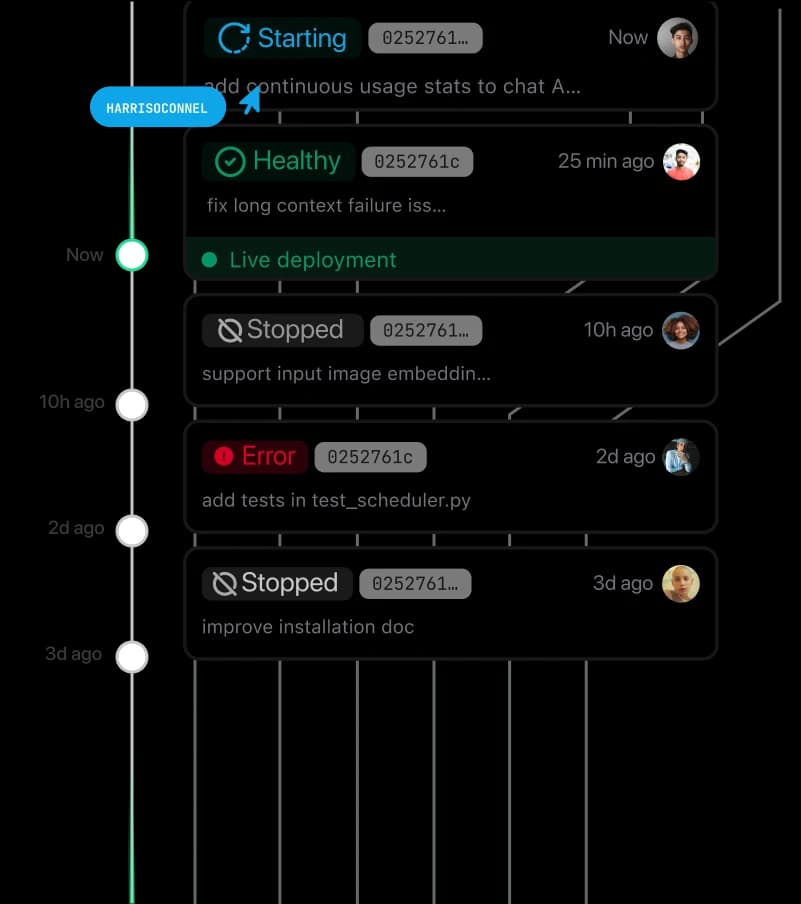

Experience collaborative development on high-performance cloud infrastructure with a simple Git push. Build together and push to prod with confidence.

Start now

Deploy in production with one-click apps

Enterprise-Ready.

Koyeb is a powerful platform with security built in at all layers.

Any Cloud, Any Hardware

We use GPUs for training and inference, and Koyeb streamlines our workload deployment, optimizing efficiency and simplifying infrastructure management. This allows us to focus on what really matters without worrying about ops and infra.

Everything you need for production

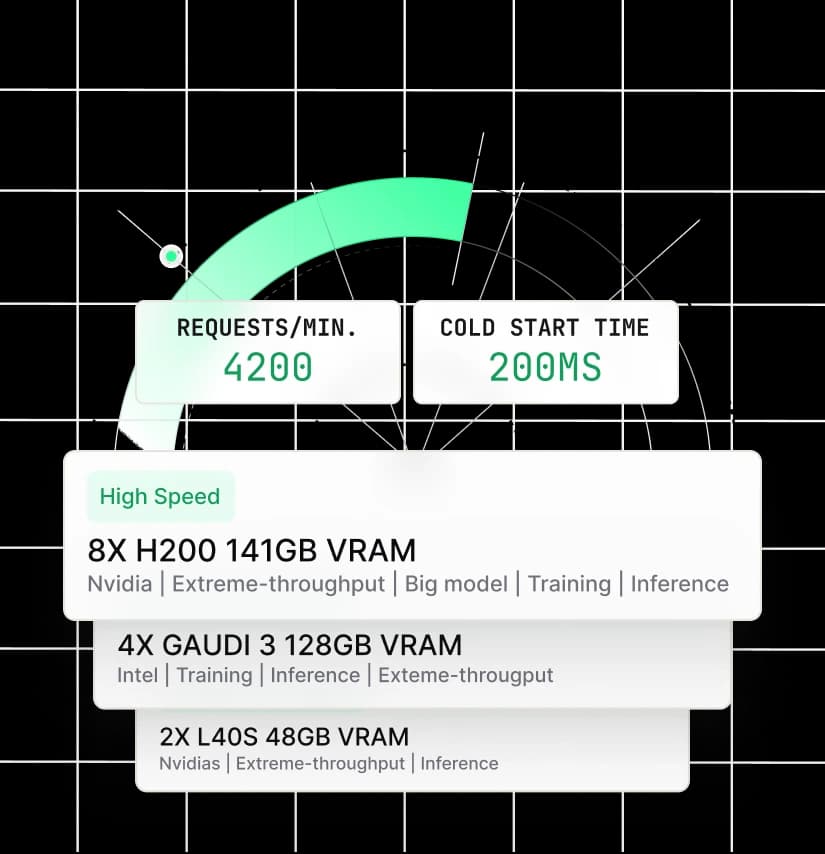

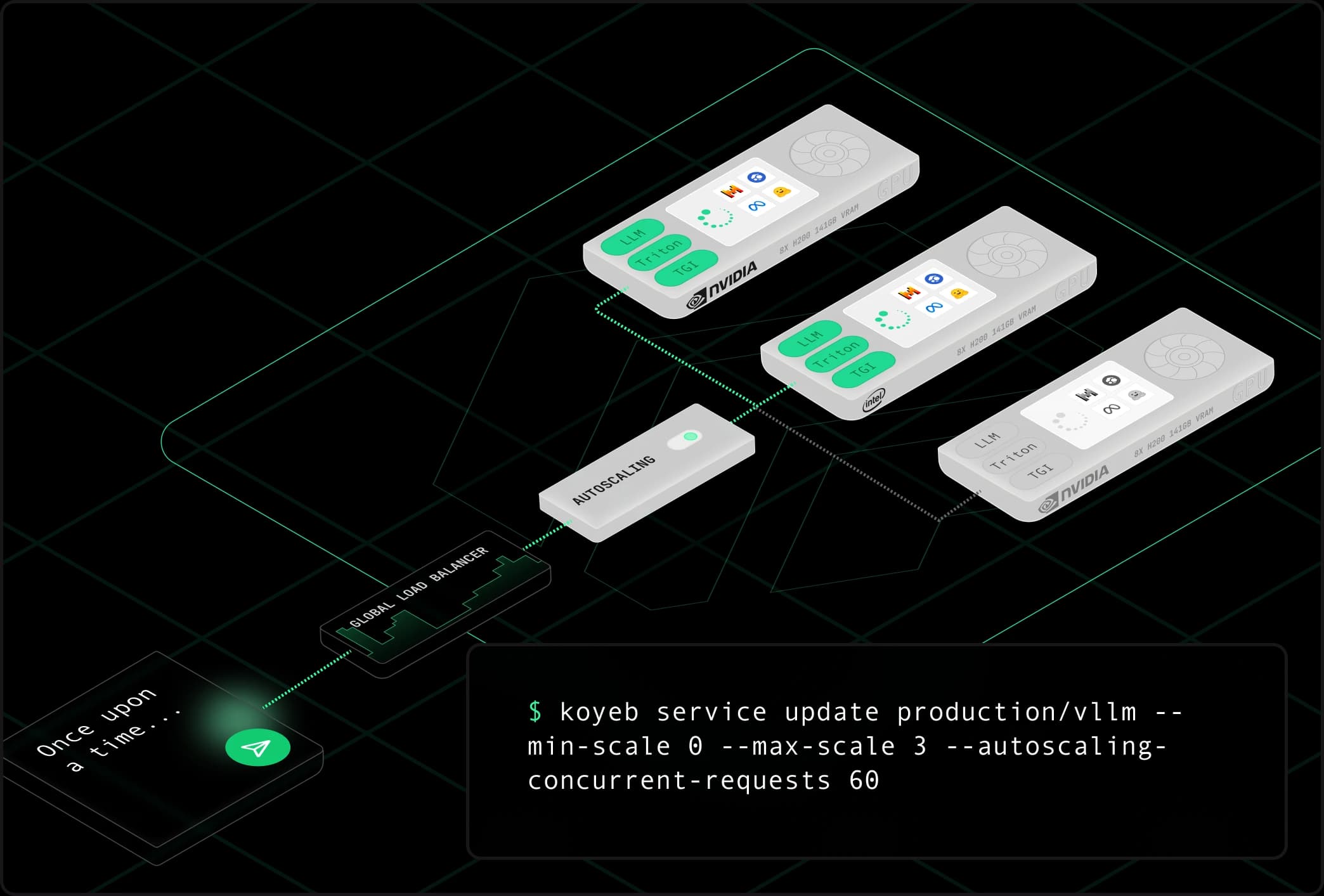

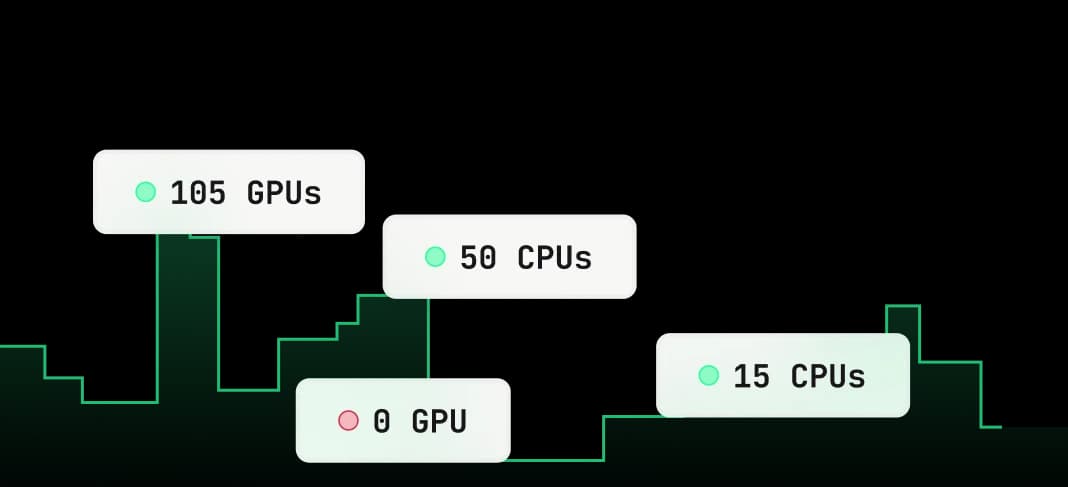

Access a wide range of optimized hardware for scale-out workloads, on demand, in seconds.

Deploy now

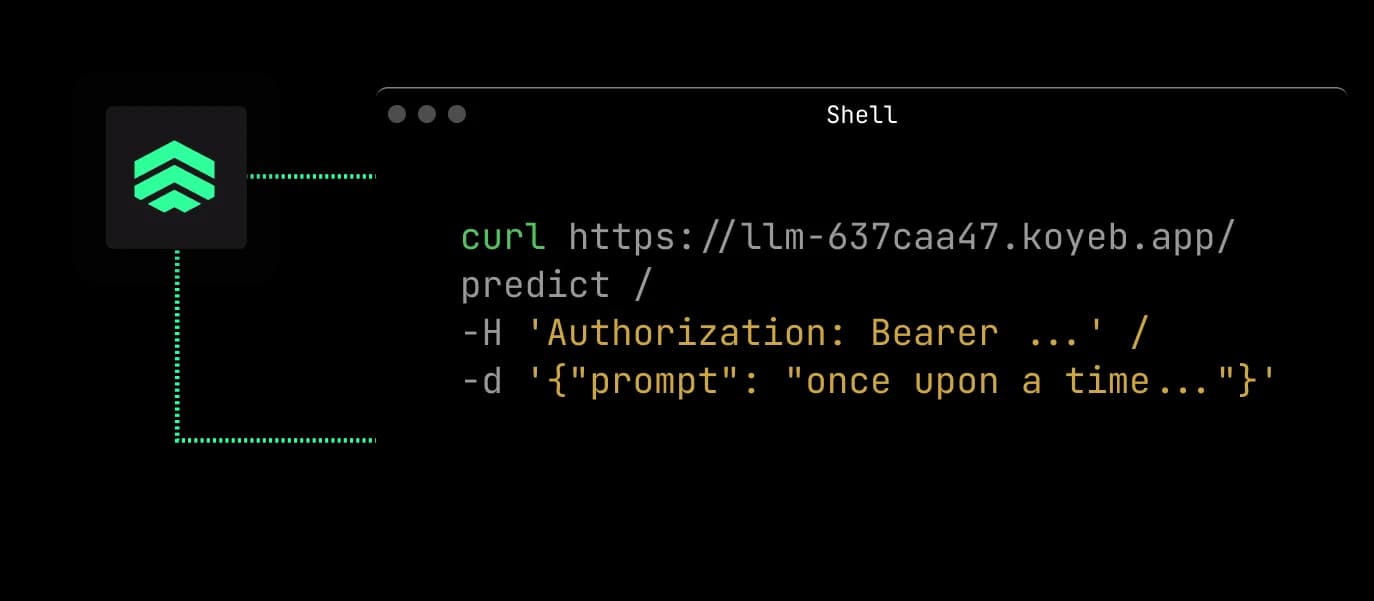

Just hit deploy to provision an API endpoint ready to handle requests in seconds. No waiting, no config.

Get Started

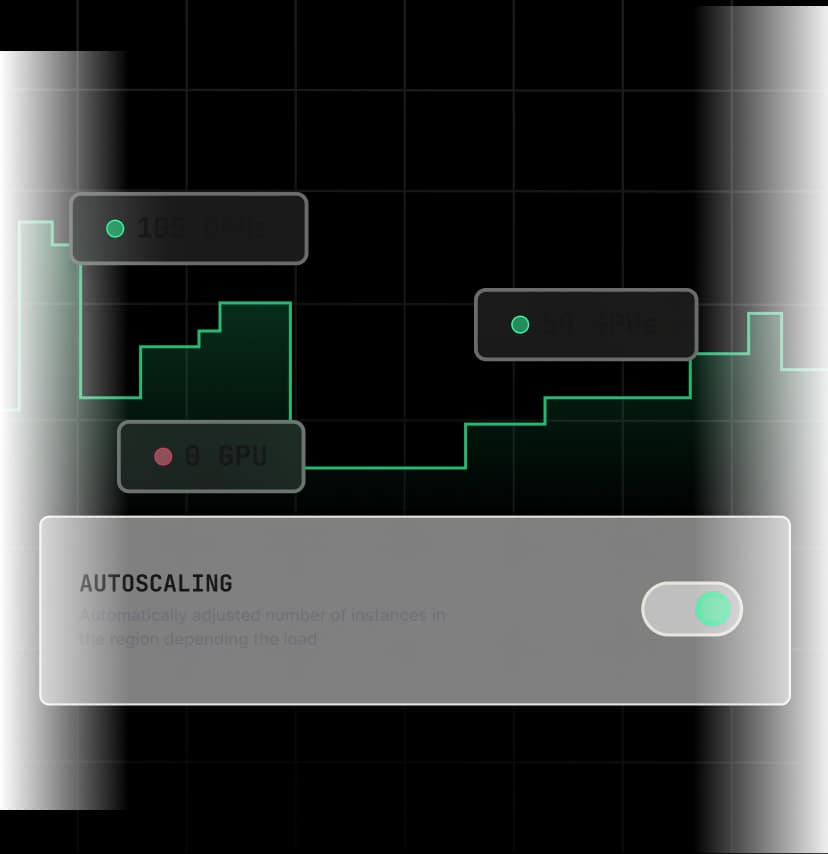

Get efficient autoscaling that adapts infrastructure to demand, with imperceptible cold starts.

Learn more

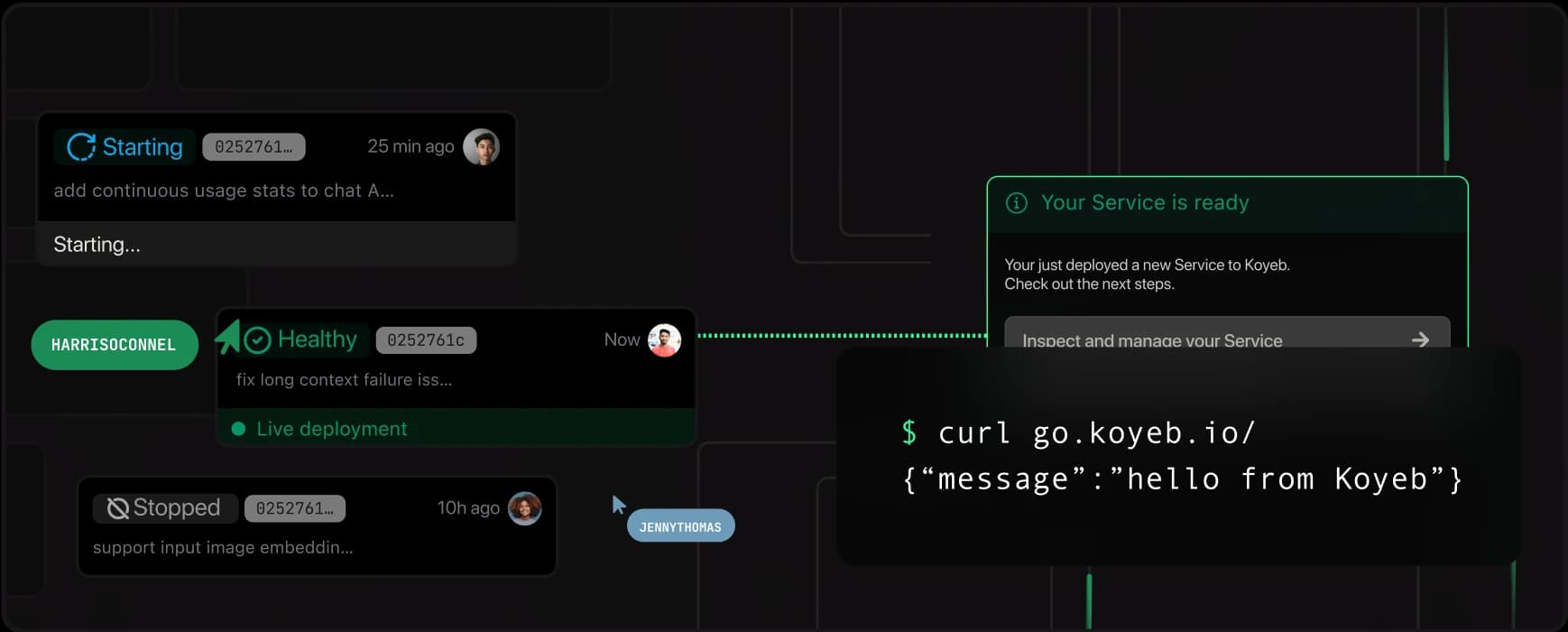

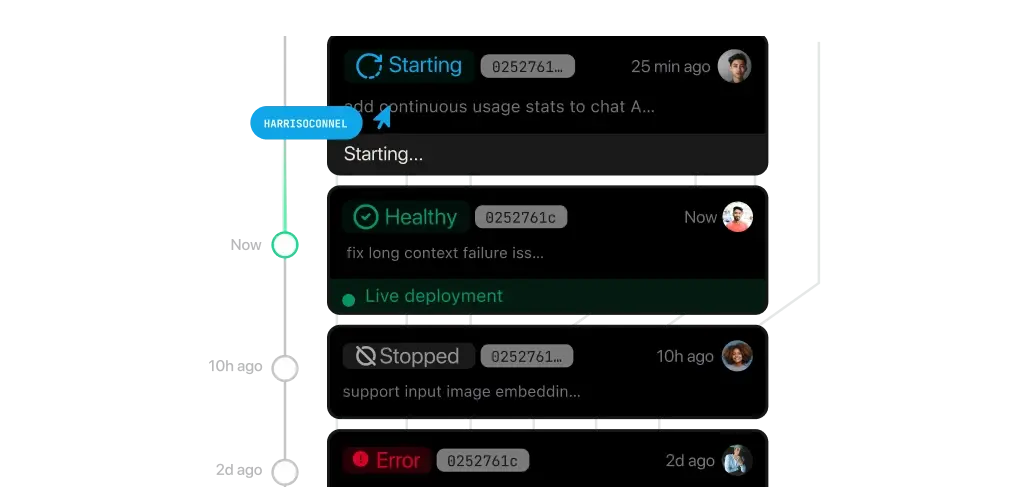

Enjoy built-in continuous deployment with automatic health checks that prevent bad deployments and keep you up and running.

View docs

Stream large or partial responses to end-users and accelerate your connections through a global edge network for instant feedback and responsive applications.

Get started

Store, index, and search embeddings with your data at scale using Koyeb's fully managed Serverless Postgres.

Store datasets, models, and fine-tuned weights on blazing-fast NVMe disks with extremely high read and write throughput.

Troubleshoot and investigate issues easily using real-time logs, or directly connect to your instances.

Latest updates from the team

- 50% Faster Time to Healthy for Deployments

- Improved Deployments Using Scale-to-Zero

- New Tutorial: Using Mistral Vibe with Koyeb Sandboxes for Secure Code Execution

- New Video: Run GitHub Copilot in Koyeb Sandboxes for Secure Code Execution

- Koyeb Enters Into A Definitive Agreement With Mistral

- Rolling Out Manual Scaling of Services

- Koyeb Sandboxes Now Accessible Using the Koyeb CLI

- Add Koyeb Knowledge to your Coding Agents Using Koyeb Skills