What is a service mesh?

A service mesh is a dedicated infrastructure layer that manages traffic (that is, communication) between services in applications composed of containerized microservices. It is a critical component of a microservices architecture, responsible for keeping service-to-service communication secure, fast, and reliable.

This article answers many of the questions you may have about service meshes: What are they, and how do they work? Who uses them, and why? Where do they come from, and what does their future hold?

- What is a service mesh?

- The two main components of a service mesh

- Why use a service mesh?

- Since when are service meshes important?

- Top open source service mesh providers

- eBPF and a future without sidecars for the service mesh?

- Read more about service meshes

What is a service mesh?

A service mesh is a dedicated infrastructure layer that manages traffic (that is, communication) between services in applications composed of containerized microservices.

Breaking this definition down:

- “Dedicated infrastructure layer” means the service mesh is a separate component, decoupled from the application’s code.

- “Manages communication between services” covers the variety of features and functionalities that keep service-to-service communication fast, reliable, and secure.

- “Applications composed of containerized microservices” describes the use case where a service mesh is most valuable.

The two main components of a service mesh

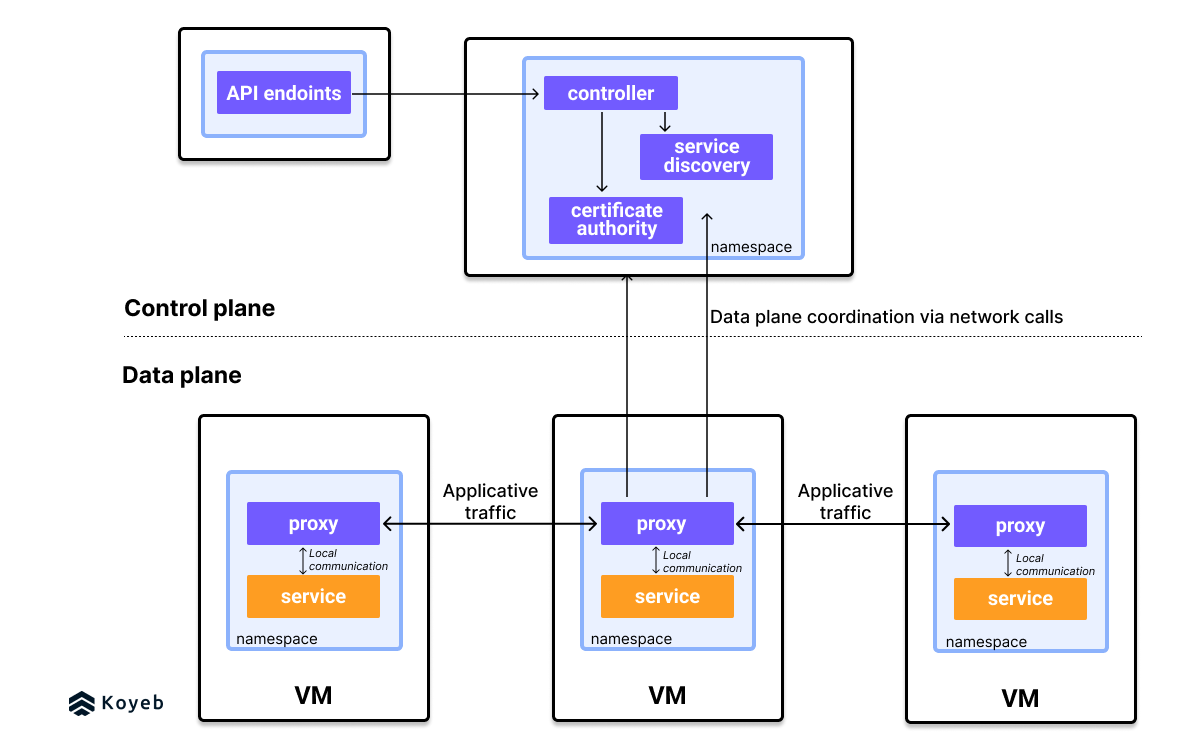

Looking at it from a high level, a service mesh contains two parts: a data plane and a control plane.

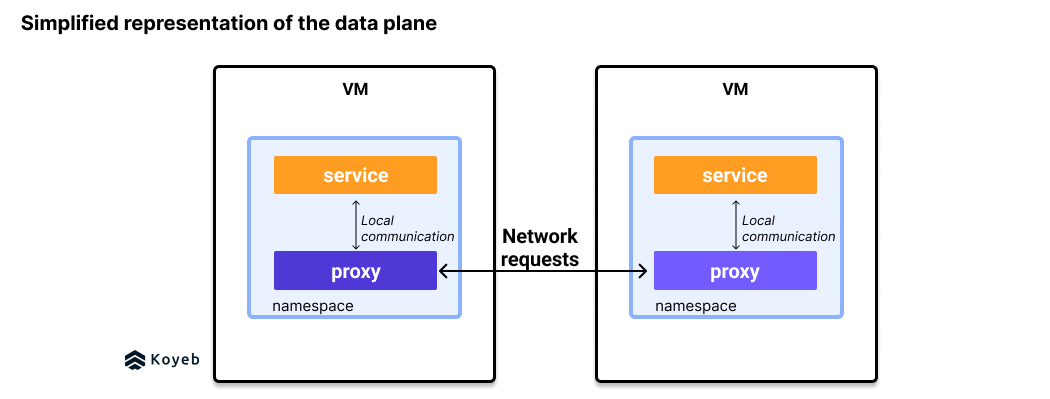

The data plane is a network of proxy sidecars attached to services

In a data plane, when one service wants to contact another service, it actually contacts its own sidecar, which then contacts the sidecar of the second service. Instead of calling other services directly, services make calls to their sidecar proxy. This pattern is called the “sidecar model” because each proxy runs right next to its service (in Kubernetes, in the same pod), like a “sidecar”.

The data plane is made up of userspace proxies that run alongside the services of an application, as processes separate from the application process. Each application communicates only with its dedicated proxy, and the proxies are the ones that make calls to and from services. As a result, the proxies implement the service mesh’s functionality, decoupled from the application’s code.
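To make the request path concrete, here is a toy, in-process model of the sidecar pattern. It is a sketch under simplifying assumptions, not how any real proxy works: there is no networking (a dictionary stands in for the network), and the class and service names (`SidecarProxy`, `orders`, `frontend`) are hypothetical. What it shows is that each service only talks to its own sidecar, and that the sidecars are where cross-cutting behavior such as logging can live.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class SidecarProxy:
    """Hypothetical proxy that sits next to exactly one service."""
    service_name: str
    network: Dict[str, "SidecarProxy"]               # stand-in for real networking
    handler: Optional[Callable[[str], str]] = None   # the local service's request handler
    log: List[str] = field(default_factory=list)     # what the mesh can observe

    def outbound(self, target: str, payload: str) -> str:
        # The local service calls its own sidecar, never the target service directly.
        self.log.append(f"out {self.service_name}->{target}")
        return self.network[target].inbound(self.service_name, payload)

    def inbound(self, caller: str, payload: str) -> str:
        # Traffic arriving from another sidecar is handed to the local service.
        self.log.append(f"in {caller}->{self.service_name}")
        if self.handler is None:
            raise RuntimeError(f"no service attached to {self.service_name}")
        return self.handler(payload)


def build_mesh() -> Dict[str, SidecarProxy]:
    network: Dict[str, SidecarProxy] = {}
    # The "orders" service's business logic knows nothing about networking.
    network["orders"] = SidecarProxy("orders", network, handler=lambda who: f"order for {who}")
    # The "frontend" service initiates calls through its own sidecar.
    network["frontend"] = SidecarProxy("frontend", network)
    return network


mesh = build_mesh()
# frontend -> frontend's sidecar -> orders' sidecar -> orders
reply = mesh["frontend"].outbound("orders", "alice")
print(reply)  # order for alice
```

Both sidecars record the call in their logs even though neither service contains any logging or networking code, which is the decoupling the sidecar model is meant to provide.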

The choice of proxy is an implementation detail, and different service meshes rely on different proxies. Envoy Proxy is widely used, whereas Linkerd uses its own purpose-built proxy, Linkerd-proxy. The table below compares the proxies used by popular open source service meshes.

The control plane

The control plane is a set of components that coordinates the behavior of the data plane. It lets you, the person implementing the service mesh, set and change the behavior of the data plane proxies via an API. For example, if you want to encrypt all communication between services, the control plane can take charge of distributing certificates to all proxies.

In the example diagram, these components include a certificate authority and service discovery.
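The sketch below models the control plane’s role in a few lines of Python. It assumes a drastically simplified world: a single in-memory process, a dictionary as the service registry, and a placeholder string instead of a real certificate issued by a certificate authority. All names (`ControlPlane`, `Proxy`, `ProxyConfig`, `set_mtls`) are hypothetical; real control planes expose their own APIs, and Envoy-based meshes typically push configuration to proxies over Envoy’s xDS APIs.

```python
import secrets
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ProxyConfig:
    version: int
    endpoints: Dict[str, List[str]]  # service discovery: service name -> addresses
    mtls: bool
    cert: str                        # placeholder, NOT a real X.509 certificate


@dataclass
class Proxy:
    service: str
    config: Optional[ProxyConfig] = None

    def apply(self, config: ProxyConfig) -> None:
        # A real proxy would reload its listeners and clusters without restarting.
        self.config = config


class ControlPlane:
    def __init__(self) -> None:
        self.registry: Dict[str, List[str]] = {}
        self.proxies: List[Proxy] = []
        self.mtls = False
        self.version = 0

    def register(self, service: str, address: str, proxy: Proxy) -> None:
        self.registry.setdefault(service, []).append(address)
        self.proxies.append(proxy)
        self.push()

    def set_mtls(self, enabled: bool) -> None:
        # The operator changes a policy once, through the control plane's API...
        self.mtls = enabled
        self.push()

    def push(self) -> None:
        # ...and the control plane redistributes configuration to every proxy.
        self.version += 1
        snapshot = {name: list(addrs) for name, addrs in self.registry.items()}
        for proxy in self.proxies:
            # Stand-in for a certificate issued by the mesh's certificate authority.
            cert = f"cert-for-{proxy.service}-{secrets.token_hex(4)}" if self.mtls else ""
            proxy.apply(ProxyConfig(self.version, snapshot, self.mtls, cert))


cp = ControlPlane()
orders, payments = Proxy("orders"), Proxy("payments")
cp.register("orders", "10.0.0.1:8080", orders)
cp.register("payments", "10.0.0.2:8080", payments)
cp.set_mtls(True)
print(orders.config.version, orders.config.mtls, sorted(orders.config.endpoints))
# 3 True ['orders', 'payments']
```

The design point is that the operator changes a policy in one place and every proxy receives the updated configuration, with no change to the services themselves.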

How is a service mesh different from an API gateway?

Service meshes manage communication between services inside an application whereas API gateways manage traffic from outside the application to services inside the application.

Kong dove into the differences in their article Service Mesh vs. API Gateway: What’s The Difference? and provided an example use case at the end to showcase how API gateways and service meshes work together.

Why use a service mesh?

When deploying microservices, two things become more important than ever: network management and observability. A service mesh is a powerful tool for handling both without implementing the functionality directly in the application’s code. Implementing it in application code was, in fact, the predecessor to the service mesh; more on that below.

Managing the traffic between these services becomes increasingly complicated as the number of services in an application grows. This is another reason for decoupling the code required to manage service-to-service communication from the services themselves.

In The Service Mesh: What every software engineer needs to know about the world’s most over-hyped technology, William Morgan, one of the creators of Linkerd, the first service mesh project, puts it this way: “The service mesh gives you features that are critical for running modern server-side software in a way that’s uniform across your stack and decoupled from application code.”

Critical features offered by a service mesh

To elaborate on the wide variety of features a service mesh provides, here is a short list of critical functions it performs to manage inter-service communication:

- Service discovery

- Load balancing

- TLS encryption

- Authentication and authorization

- Metrics aggregation, such as request throughput and response time

- Distributed tracing

- Rate limiting

- Routing and traffic management

- Traffic splitting

- Request retries

- Error handling

These features range from essential to nice-to-have, depending on the scale of your infrastructure. A service mesh provides them out of the box, without changes to application code.
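Two of the features above, traffic splitting and request retries, are easy to illustrate in isolation. The sketch below shows the kind of logic a proxy might apply; it assumes a synchronous call that signals failure by raising `ConnectionError`, and the function names and the 90/10 split are hypothetical. Real meshes configure these behaviors declaratively rather than in application code.

```python
import random
import time
from typing import Callable, Dict


def pick_version(weights: Dict[str, int], rng: random.Random) -> str:
    """Weighted traffic splitting, e.g. {"v1": 90, "v2": 10} for a canary release."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


def call_with_retries(send: Callable[[], str], attempts: int = 3, base_delay: float = 0.01) -> str:
    """Retry a failing call with exponential backoff between attempts."""
    for attempt in range(attempts):
        try:
            return send()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the last error to the caller
            time.sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")


rng = random.Random(42)
routed = [pick_version({"v1": 90, "v2": 10}, rng) for _ in range(10_000)]
canary_share = routed.count("v2") / len(routed)
print(canary_share)  # close to 0.1

failures = iter([ConnectionError("reset"), ConnectionError("reset")])


def flaky_send() -> str:
    # Fails twice, then succeeds, like an upstream that is briefly unavailable.
    error = next(failures, None)
    if error is not None:
        raise error
    return "200 OK"


print(call_with_retries(flaky_send))  # 200 OK (on the third attempt)
```

Retries only re-raise after the last attempt, so a transient failure is hidden from the caller while a persistent one still surfaces.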

A service mesh is agnostic to how you develop

One of the advantages of building applications made up of microservices is being able to use different languages and frameworks. A service mesh abstracts network management by implementing these functions in the proxies between services, rather than relying on framework- and language-specific libraries. Developers across teams can use whichever framework or language they want for their services without worrying about how those services will communicate with the rest of the application.

A dedicated infrastructure layer

Since the service mesh is an abstracted layer of infrastructure, it decouples services from the network they run on. Troubleshooting network problems becomes separate from troubleshooting the application’s code. This separation allows engineering teams to stay focused on developing the business logic relevant to their services, and DevOps teams to focus on maintaining the reliability and availability of those services.

Since when are service meshes important?

Service mesh adoption has grown as building and deploying microservices has become easier. This Atlassian article discusses the rise of microservices architecture and the value it brings compared to monoliths.

When breaking up a monolithic app into microservices, the communication between these services becomes vital to the health and performance of the application. Technically, you could incorporate the features that manage this traffic directly into your application. This is what Twitter, Google, and Netflix did with large libraries like Finagle, Stubby, and Hystrix.

However, not everyone had the resources or engineering capacity these tech giants had when they created these libraries. Plus, these custom libraries are not perfect solutions. They are coupled with their applications, which means they are language- and framework-specific, must be understood by every team developing services, and need constant maintenance.

The arrival of Docker and Kubernetes in the 2010s greatly reduced the pain and operating cost of deploying containerized microservices for engineering teams across the board. Docker revolutionized containerization: containers give us a standard unit of software that packages code and all its dependencies so it runs reliably across different environments. Kubernetes gives us a way to orchestrate these containers, deploying, scaling, and managing them across a cluster of machines.

With more people deploying microservices, there are more people who need to ensure the fast, reliable, and secure communication between those decoupled services. Fortunately, just as container orchestration simplified deploying microservices, it simplifies deploying and running a service mesh.

Top open source service mesh providers

Of the many service mesh solutions available, the most popular open source ones are Linkerd, Istio, and Consul. Here at Koyeb, we use Kuma.

| Service mesh | Proxy | Creators | Initial release | Used by (according to StackShare.io) |
| --- | --- | --- | --- | --- |
| Consul | Envoy | Open source project from HashiCorp | 2014 | Robinhood, Slack, LaunchDarkly |
| Linkerd | Linkerd-proxy, a Rust micro-proxy | Open source CNCF project stewarded by Buoyant | 2016 | Monzo, Amperity, Teachable |
| Istio | Envoy | Open source project from Google, Lyft, and IBM | 2017 | Medium.com, Skyscanner, Lime |
| Kuma | Envoy | Open source project stewarded by Kong Inc. | 2019 | Koyeb |

Native service mesh when deploying on Koyeb

At Koyeb, we built a multi-region service mesh with Kuma and Envoy and wrote about it in Building a Multi-Region Service Mesh with Kuma/Envoy, Anycast BGP, and mTLS.

Our multi-region service mesh plays a pivotal role in routing requests for services hosted on Koyeb to the bare-metal machines where those services actually run. It is vital to providing a seamless experience on the network because it performs local load balancing, service discovery, and end-to-end encryption.
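Local load balancing generally means preferring nearby endpoints and falling back to remote ones only when necessary. The sketch below is a generic illustration of that idea, not Koyeb’s implementation (Envoy has its own, more complete locality-aware load balancing). The region names, addresses, and the `LocalityAwareBalancer` class are hypothetical, and health is tracked with a simple set rather than real health checks.

```python
import itertools
from typing import List, Tuple

Endpoint = Tuple[str, str]  # (region, address)


class LocalityAwareBalancer:
    """Round-robin over healthy endpoints in the local region, else anywhere healthy."""

    def __init__(self, local_region: str, endpoints: List[Endpoint]) -> None:
        self.local_region = local_region
        self.endpoints = endpoints
        self.healthy = {address for _, address in endpoints}
        self._counter = itertools.count()

    def mark_down(self, address: str) -> None:
        # In a real mesh, health would come from active or passive health checks.
        self.healthy.discard(address)

    def pick(self) -> str:
        local = [a for r, a in self.endpoints if r == self.local_region and a in self.healthy]
        # Only leave the local region when no local endpoint is healthy.
        candidates = local or [a for _, a in self.endpoints if a in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy endpoints")
        return candidates[next(self._counter) % len(candidates)]


lb = LocalityAwareBalancer(
    "region-a",
    [("region-a", "10.1.0.1"), ("region-a", "10.1.0.2"), ("region-b", "10.2.0.1")],
)
first_picks = [lb.pick() for _ in range(4)]
print(first_picks)  # ['10.1.0.1', '10.1.0.2', '10.1.0.1', '10.1.0.2']

lb.mark_down("10.1.0.1")
lb.mark_down("10.1.0.2")
fallback = lb.pick()
print(fallback)  # 10.2.0.1
```

Keeping traffic in the caller’s region while local endpoints are healthy reduces latency; crossing regions is treated as a failover path rather than the default.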

eBPF and a future without sidecars for the service mesh?

Remember when we talked earlier about the sidecar model for a service mesh’s data plane? A new, sidecarless model for the data plane is emerging in the ecosystem. There is also a lot of attention on eBPF and the role it could play in further improving service-to-service communication. We’ll save this reflection for another blog post.