Service Mesh and Microservices: Improving Network Management and Observability

Whether you're transitioning away from a monolith or building a greenfield app, adopting a microservice architecture brings many benefits as well as certain challenges.

These challenges include managing the network and maintaining observability across the microservice architecture. Enter the service mesh, a component of modern cloud-native applications that handles inter-service communication and addresses both network management and visibility into the architecture.

This post is a follow-up to our service discovery post, where we explained the technology and process behind locating services across a network. Here we'll look at what a service mesh is, why it's important, and how it works. Then we'll discuss when you should consider adding one to your app's architecture and how to do so.

With a native service mesh and service discovery, Koyeb enables developers to connect, secure, and observe the microservices in their applications with zero configuration. Koyeb is a next-generation, developer-friendly serverless platform for hosting web services, apps, APIs, and more.

What is a Service Mesh?

A service mesh is a dedicated infrastructure layer responsible for secure, fast, and reliable communication between services in containerized and microservice applications. This includes monitoring and controlling the traffic that flows between those services.

History and Driving Force Behind the Service Mesh

The origins of the service mesh lie with Linkerd, an open-source service mesh created by Buoyant. From a technical standpoint, the service mesh is a great way to introduce additional logic into a microservice architecture. There is, however, a more important driving force behind its creation and adoption.

In his extensive and insightful post The Service Mesh: What Every Software Engineer Needs to Know about the World's Most Over-Hyped Technology, William Morgan, one of the creators of Linkerd, explains, "The service mesh gives you features that are critical for running modern server-side software in a way that's uniform across your stack and decoupled from application code."

Because the service mesh decouples the microservices from the network they run on, the engineering teams responsible for those services do not have to spend time or energy managing the network or troubleshooting problems on it. That responsibility stays separate from the business logic, and the service mesh makes it simpler overall.

The Sidecar Model

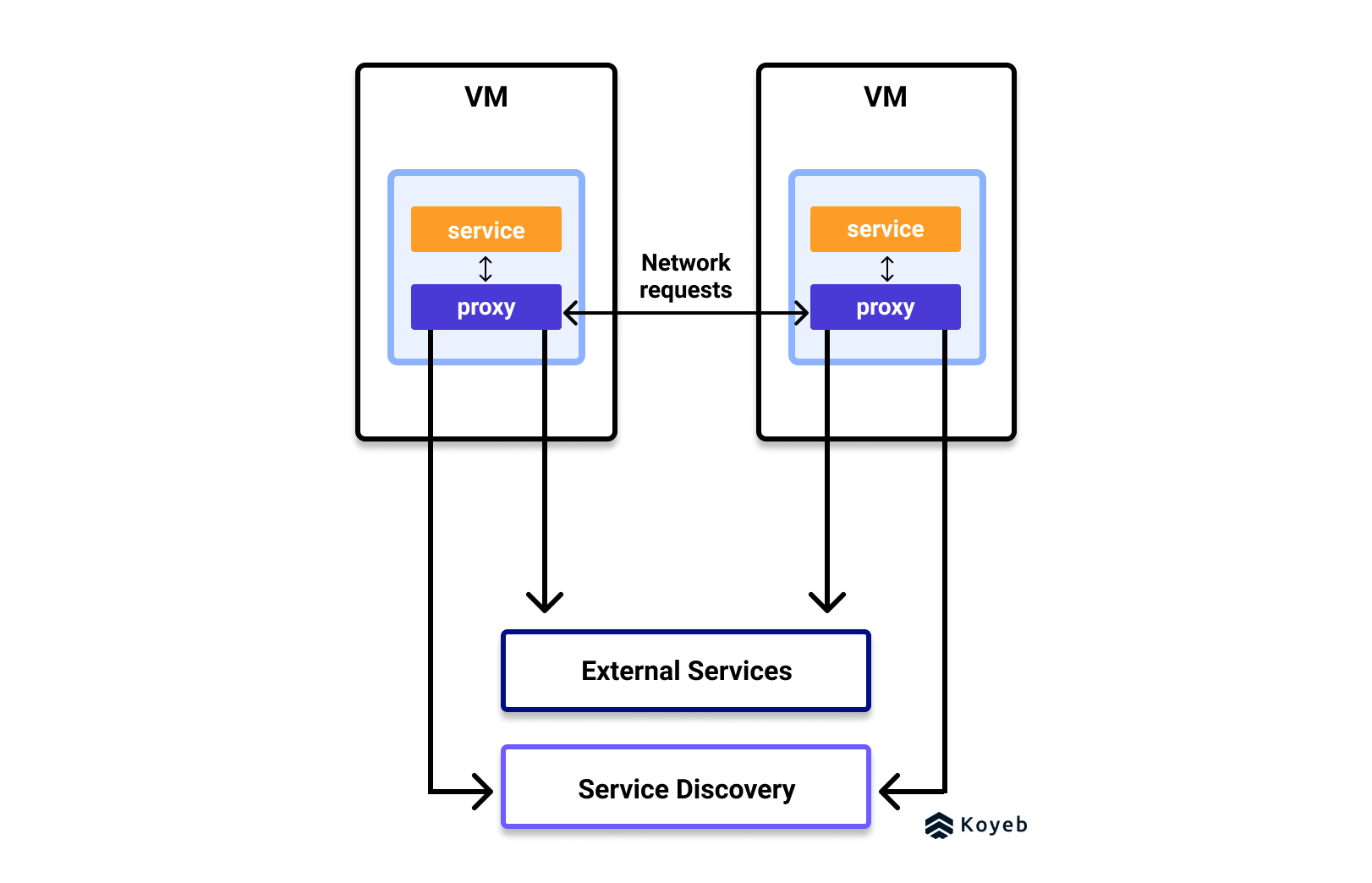

A service mesh is integrated into an application's architecture by placing a proxy instance next to each service instance. Because this proxy rides alongside its service like a sidecar, the approach is called the sidecar model.

A service mesh is composed of a data plane and a control plane:

- The data plane is made up of proxies, which pass calls between the services they are attached to. Examples of proxies used in service meshes include Envoy, linkerd2-proxy, and HAProxy.

- The control plane is a set of management processes that handle the behavior of the proxies.

The control plane provides an API that allows users to monitor and change the behavior of the data plane.
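To make the split between the two planes concrete, here is a minimal Python sketch of a control plane distributing routing config to data-plane proxies. `ControlPlane`, `SidecarProxy`, `ProxyConfig`, and `set_endpoints` are hypothetical names chosen for illustration; they are not the API of Istio, Linkerd, or any other real mesh, and a real control plane pushes config over the network (Envoy, for example, is configured through its xDS APIs) rather than through in-memory method calls.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ProxyConfig:
    """Hypothetical routing snapshot the control plane sends to every proxy."""
    version: int
    routes: Dict[str, List[str]]  # service name -> endpoint addresses


@dataclass
class SidecarProxy:
    """Hypothetical data-plane proxy: it only applies the config it is given."""
    name: str
    config: Optional[ProxyConfig] = None

    def apply(self, config: ProxyConfig) -> None:
        # Ignore stale snapshots so an out-of-order push cannot roll config back.
        if self.config is None or config.version > self.config.version:
            self.config = config


@dataclass
class ControlPlane:
    """Hypothetical management process holding the desired state."""
    proxies: List[SidecarProxy] = field(default_factory=list)
    routes: Dict[str, List[str]] = field(default_factory=dict)
    version: int = 0

    def register(self, proxy: SidecarProxy) -> None:
        # A newly started proxy receives the current snapshot, if one exists.
        self.proxies.append(proxy)
        if self.version > 0:
            proxy.apply(self._snapshot())

    def set_endpoints(self, service: str, endpoints: List[str]) -> None:
        # The user-facing API: one change here updates every proxy's behavior.
        self.routes[service] = list(endpoints)
        self.version += 1
        snapshot = self._snapshot()
        for proxy in self.proxies:
            proxy.apply(snapshot)

    def _snapshot(self) -> ProxyConfig:
        # Copy the lists so later edits to self.routes don't leak into proxies.
        return ProxyConfig(self.version, {s: list(e) for s, e in self.routes.items()})


cp = ControlPlane()
orders, payments = SidecarProxy("orders"), SidecarProxy("payments")
cp.register(orders)
cp.register(payments)
cp.set_endpoints("inventory", ["10.0.0.5:8080", "10.0.0.6:8080"])
print(orders.config.routes["inventory"])  # ['10.0.0.5:8080', '10.0.0.6:8080']
```

Attaching a version to each snapshot lets a proxy discard a stale push; this sketch keeps that idea but leaves out acknowledgements, retries, and partial updates.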

The proxies abstract away network management and inter-service communication, and they can also handle monitoring and security. A service communicates only with its local proxy; that proxy then makes network calls to other proxies in the mesh and to services outside it.
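The request path described in the paragraph above can be sketched in-process: the calling service hands its request to its own proxy, which looks up the target, tags the request with the caller's name, and passes it to the target's proxy, which finally invokes the local service. `Proxy`, `outbound`, `inbound`, and the `x-source` field are hypothetical; plain function calls stand in for the loopback and network hops a real sidecar such as Envoy or linkerd2-proxy would make.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A "service" is modeled as a function from a request dict to a response dict.
Handler = Callable[[dict], dict]


@dataclass
class Proxy:
    """Hypothetical sidecar that sits next to exactly one service instance."""
    service_name: str
    local_app: Handler
    # Stand-in for service discovery: name -> peer proxy.
    # repr=False avoids printing the whole mesh recursively.
    mesh: Dict[str, "Proxy"] = field(repr=False)
    log: List[str] = field(default_factory=list)

    def outbound(self, target: str, request: dict) -> dict:
        # Hop 1 (local): the service hands its request to its own sidecar and
        # names the target; it never deals with addresses or the network.
        peer = self.mesh.get(target)
        if peer is None:
            return {"status": 503, "body": f"no route to {target}"}
        # The proxy can add mesh metadata without the service knowing about it.
        tagged = {**request, "x-source": self.service_name}
        self.log.append(f"{self.service_name} -> {target}")
        # Hop 2 (network): proxy-to-proxy call, modeled here as a method call.
        return peer.inbound(tagged)

    def inbound(self, request: dict) -> dict:
        # Hop 3 (local): the receiving sidecar delivers the request to its service.
        self.log.append(f"{request.get('x-source', 'unknown')} -> {self.service_name}")
        return self.local_app(request)


mesh: Dict[str, Proxy] = {}
mesh["payments"] = Proxy("payments", lambda req: {"status": 200, "body": f"charged {req['amount']}"}, mesh)
mesh["orders"] = Proxy("orders", lambda req: {"status": 200, "body": "order placed"}, mesh)

print(mesh["orders"].outbound("payments", {"amount": 42}))
# {'status': 200, 'body': 'charged 42'}
```

Neither service function knows where the other lives or how the call travels; that knowledge sits entirely in the proxies, which is what lets a mesh change routing or add security without touching application code.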

Important for Health Checks, Network Management, and Security

Service meshes provide vital services such as:

- Load balancing;

- Service discovery;

- Routing and traffic management;

- Encryption, authentication, and authorization;

- Observability and traceability;

- Rate limiting;

- Error handling;

- and monitoring capabilities.
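Load balancing and error handling, two items from the list above, often work together inside the proxy. The sketch below assumes a simple round-robin policy that retries on the next endpoint when a call fails; `RoundRobinBalancer`, `EndpointError`, and the `send` callback are hypothetical names, and `send` stands in for the real network call. Production proxies typically add timeouts, backoff, and health checking on top of this.

```python
from __future__ import annotations

import itertools
from typing import Callable, List, Optional


class EndpointError(Exception):
    """Raised when a (simulated) endpoint fails to answer."""


class RoundRobinBalancer:
    """Hypothetical proxy-side balancer: rotates through endpoints and, when a
    call fails, retries on the next endpoint, up to max_attempts tries in total."""

    def __init__(self, endpoints: List[str], max_attempts: int = 3) -> None:
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        self.max_attempts = max_attempts
        self._rotation = itertools.cycle(list(endpoints))

    def call(self, send: Callable[[str], str]) -> str:
        last_error: Optional[EndpointError] = None
        for _ in range(self.max_attempts):
            endpoint = next(self._rotation)  # load balancing: next in turn
            try:
                return send(endpoint)
            except EndpointError as err:
                last_error = err  # error handling: fall through to a retry
        raise EndpointError(f"all {self.max_attempts} attempts failed") from last_error


def flaky_send(endpoint: str) -> str:
    # Stand-in for a network call; pretend one instance is down.
    if endpoint == "10.0.0.6:8080":
        raise EndpointError(f"{endpoint} unreachable")
    return f"ok from {endpoint}"


lb = RoundRobinBalancer(["10.0.0.5:8080", "10.0.0.6:8080", "10.0.0.7:8080"])
print([lb.call(flaky_send) for _ in range(3)])
# ['ok from 10.0.0.5:8080', 'ok from 10.0.0.7:8080', 'ok from 10.0.0.5:8080']
```

The failing instance costs the second request an extra hop, but the caller still gets an answer and never sees the error; a real mesh would also stop sending traffic to an instance that keeps failing.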

Benefits of a Service Mesh

As listed above, a service mesh brings a number of benefits. Taken together, they let engineering teams run vital health checks on their services and manage and secure the network those services run on. Because the service mesh does the heavy lifting of network management, developers can focus their time and energy on building the services that run on it.

When to Add a Service Mesh

Given the shifting trend towards cloud-native apps and microservice architectures, service meshes are becoming a vital infrastructure component for modern apps.

If you're building your microservice architecture from scratch, finding the right time to add a service mesh means weighing your engineering team's capacity against the opportunity cost of adopting one now versus later.

Signs that you should consider adding a service mesh to your application's architecture include:

- Your microservices are written in different languages or rely on different frameworks.

- Your architecture relies on third-party code or must work across teams building on different foundations.

- Your teams spend a lot of time trying to identify, localize, or understand problems in your microservice architecture.

- Your application requires high levels of visibility for auditability, compliance, or security reasons.

Service Mesh Built into the Koyeb Serverless Platform

Microservice applications can consist of any number of services. One of the most challenging parts of building a microservice application is ensuring reliable communication between those services.

While service meshes are crucial for optimizing a microservice architecture, they are hard to develop and implement. What's more, your engineering teams' time is better spent improving the services unique to your business than managing the network those services run on.

Keeping resilience capabilities independent of your business logic and operations saves precious time and makes more efficient use of resources. This is why Koyeb decided to build a service mesh directly into the Koyeb serverless platform.

Thanks to the native service mesh, developers can connect, secure, and observe microservices in their applications deployed on Koyeb.

Koyeb is a developer-friendly serverless platform that hosts web apps and services, Docker containers, APIs, event-driven functions, cron jobs, and more!

Sign up and deploy your next project or our demo application.

Additional Reading about Service Meshes and Discovery

- The Service Mesh: What Every Software Engineer Needs to Know about the World's Most Over-Hyped Technology by William Morgan

- Service Meshes in the Cloud Native World by Pavan Belagatti

- Kubernetes Service Mesh: A Comparison of Istio, Linkerd and Consul by Sachin Manpathak

- Service Discovery: Solving the Communication Challenge in Microservice Architectures by Koyeb