Building a Multi-Region Service Mesh with Kuma/Envoy, Anycast BGP, and mTLS

We're kind of crazy about providing the fastest way to deploy applications globally. As you might already know, we're building a serverless platform for exactly this purpose. We recently wrote about how the Koyeb Serverless Engine runs microVMs to host your Services, but we skipped a big subject: Global Networking.

Global Networking is a big way of saying "How are my requests processed?". A short answer is: requests go through our edge network before reaching your services hosted on our core locations. But this doesn't say much, except that we have an edge network and core locations.

Today, we are going to take a peek behind the curtain and discover the technology behind the Koyeb Serverless Platform that transports end-user traffic from all around the globe to the right destination where your service is running. We explore the components that make up our internal architecture by following the journey of a request from an end-user, through our Global Edge Network, and to the application running in one of our Core locations. Let's jump in!

- Our goal: Seamless deployment of Services in Multiple Regions

- First Hop: the Edge, a combination of BGP, Global Load Balancing, caching, and TLS to increase performance and security

- Second Hop: the Core, mTLS, Ingress Gateways, and the Service Mesh

- Supporting TCP and UDP connections with Global Load-Balancing

Our goal: Seamless deployment of Services in Multiple Regions

To better understand why we built a global networking solution, you first need to understand that we're looking to enable seamless deployments of services across continents with all modern requirements completely built-in.

To make this a reality, the key requirements are:

- Multi-region services: We want you to be able to deploy services running in multiple regions located on different continents in one click. You shouldn't have to replicate configuration and duplicate services to deploy in different regions.

- Seamless inter- and intra-region networking: It should be seamless for services to communicate with services deployed in the same region and also with services deployed in another region.

- Global load balancing: Inbound traffic for services located in multiple regions should be redirected toward the nearest location.

- Native TLS: TLS is not optional anymore, and manually dealing with TLS certificates is a waste of time.

- Edge caching: You shouldn't need to manually configure a CDN in front of your app. This should be built-in.

- Zero-config networking: None of this should require any configuration. You should benefit from secure private networking without any setup.

On our end, we want to be able to run in a Zero Trust network environment. We want to be able to run on any cloud service provider and we want all the traffic flowing between our baremetal machines to be fully encrypted.

First Hop: the Edge, a combination of BGP, global load balancing, caching, and TLS to increase performance and security

The journey begins with a user (or machine) request for a web service, API, or any app hosted on the Koyeb Serverless Platform. The first hop of this request is to our edge network.

Our current Global Edge Network is composed of 55 edge locations distributed across all continents. At each location sit proxy servers that are configured to process requests by either returning cached responses or routing traffic to the nearest core location where the service lives.

Using an edge network improves performance for end-users located all around the world. Without this edge network, every request would have to make the full round trip to the service running on a server in a core location. The downsides of these round trips include longer loading times for users, more pressure on your application to handle the local traffic requests, and increased bandwidth costs.

Let's dive into how this works.

Reaching the Edge: Global Load Balancing with BGP Anycast

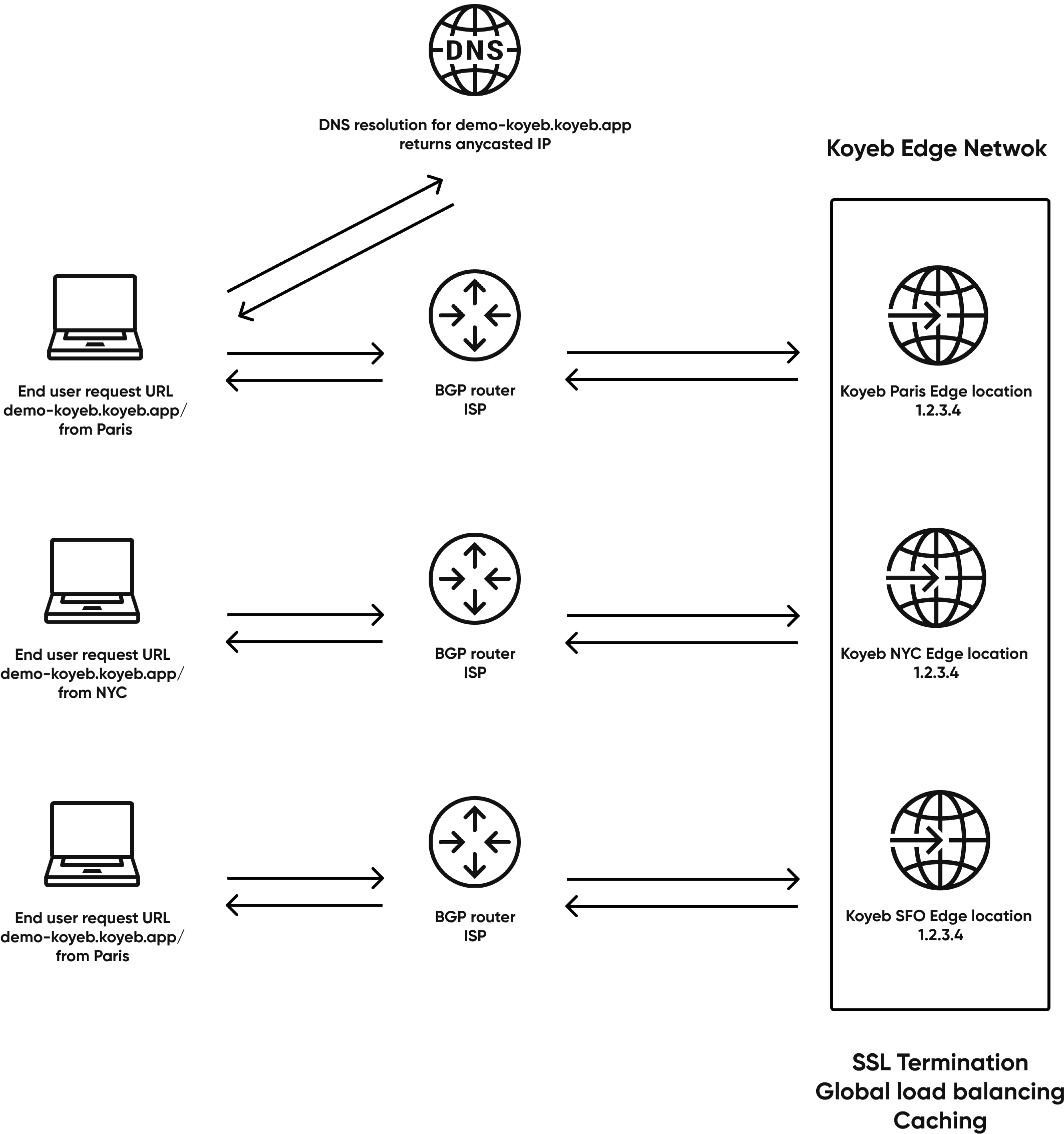

The request is originally performed using the domain name of the service and the DNS resolution will return an anycasted IP address.

The request will then reach our Global Edge Network using the anycasted IP address. Anycast is a routing and networking method that lets multiple servers announce the same IP addresses. Across the internet, we rely on anycast BGP (Border Gateway Protocol) to route requests to the geographically closest servers. These servers live on and make up the edge network.

If you're not familiar with BGP and internet routing, here is the short version: ISPs (Internet Service Providers), network operators, and cloud service providers rely on BGP to exchange routing information and determine the shortest path to an IP. As global network routing is influenced not only by technical parameters but also by business and geopolitical considerations, the selected path might not always be the shortest technical path.

To sum up, properly configured BGP Anycast provides a solution for:

- global georouting: BGP is used to determine the shortest path to reach the IP address across the internet

- redundancy: unresponsive physical servers and locations are automatically removed from the internet routing tables

- scaling and load-balancing: multiple servers can announce the same IP and traffic will be load-balanced across them

Without anycast, all requests would go to a single load-balancer in a single location to be processed.
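To make the georouting and redundancy points more concrete, here is a minimal sketch of how a client's network might choose between several edge locations announcing the same anycast prefix. It is a toy model, not our routing configuration: the `Announcement` class, the `pick_edge` function, the location names, and the AS numbers are all made up for illustration, and only AS-path length is modeled, whereas real BGP best-path selection also weighs local preference and operator policy.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Announcement:
    """One edge location announcing the shared anycast prefix (hypothetical model)."""
    location: str
    as_path: tuple[str, ...]  # AS hops as seen from the client's network
    healthy: bool = True      # False once the route has been withdrawn


def pick_edge(announcements: list[Announcement]) -> Optional[Announcement]:
    """Return the healthy announcement with the shortest AS path, if any."""
    candidates = [a for a in announcements if a.healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda a: len(a.as_path))


# Made-up routes: three locations announce the same IP prefix.
routes = [
    Announcement("paris", ("AS64500", "AS64501")),
    Announcement("singapore", ("AS64500", "AS64502", "AS64503", "AS64504")),
    Announcement("new-york", ("AS64500", "AS64505", "AS64506")),
]
best = pick_edge(routes)

# Simulate an outage: the paris route is withdrawn and traffic fails over.
after_outage = [replace(r, healthy=False) if r.location == "paris" else r for r in routes]
fallback = pick_edge(after_outage)
```

With these made-up routes, `best` is the paris announcement, and once paris is marked unhealthy the selection falls back to new-york, which mirrors how a withdrawn route disappears from the routing tables.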

High Performance at the Edge: TLS, HTTP/2, and Caching

The initial connection to the Edge is done using HTTP/2 and secured with the latest version of TLS. Using TLS at the edge serves two key purposes: native encryption of the traffic is crucial for security, and terminating TLS at the edge improves TTFB (Time To First Byte).

Once a request reaches the closest server on the edge network, this edge server determines if it can return a cached response or if it must forward the request to the nearest core location to be processed:

- If the request was previously cached and the cache is still valid, the cached response is directly used to fulfill the request. In this case, the journey ends when the response is returned to the user. Ultimately, this would be the shortest journey a request could travel, and thus would be the fastest response it could receive.

- If the edge location determines it cannot return a cached response (for example, if the cached response is invalid or if this is the first request to your service), the journey of the request continues to the nearest core location hosting your service.
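The two cases above can be sketched as a small decision function. This is a simplified illustration rather than the logic our edge proxies run: `EdgeCache`, `CachedResponse`, and the `fetch_from_core` callback are hypothetical names, and freshness is reduced to a single `max_age` value, whereas real HTTP caching involves more directives and validation.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CachedResponse:
    body: bytes
    stored_at: float  # timestamp in seconds when the response was cached
    max_age: float    # freshness lifetime in seconds


class EdgeCache:
    """Toy edge cache: serve fresh entries, otherwise forward to the core."""

    def __init__(self, fetch_from_core: Callable[[str], tuple[bytes, float]]):
        # fetch_from_core(url) returns (body, max_age); hypothetical callback.
        self._fetch = fetch_from_core
        self._store: dict[str, CachedResponse] = {}

    def handle(self, url: str, now: Optional[float] = None) -> tuple[bytes, str]:
        now = time.time() if now is None else now
        entry = self._store.get(url)
        if entry is not None and now - entry.stored_at < entry.max_age:
            return entry.body, "HIT"   # the journey ends at the edge
        body, max_age = self._fetch(url)  # the journey continues to the core
        if max_age > 0:
            self._store[url] = CachedResponse(body, now, max_age)
        return body, "MISS"


calls = []

def fake_core(url: str) -> tuple[bytes, float]:
    """Stand-in for a core location; responses stay fresh for 60 seconds."""
    calls.append(url)
    return b"hello", 60.0

edge = EdgeCache(fake_core)
first = edge.handle("/index.html", now=0.0)    # first request: forwarded
second = edge.handle("/index.html", now=30.0)  # still fresh: served from the edge
third = edge.handle("/index.html", now=90.0)   # expired: forwarded again
```

In this run, only the first and third requests reach the stand-in core, while the second is answered directly from the edge.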

If you're not familiar with how, when, and why requests are cached at the edge, you can check our post about cache-control and CDNs.

Routing to the right Core Location

If a cached response cannot be returned, the request must travel from the edge network to the requested service to be processed. This service is hosted in containers, which live in microVMs that run directly on our baremetal servers. These baremetal servers are located in our core locations.

To perform global traffic routing and find the nearest core location where the service is deployed, the edge network contacts our control plane. The result of this lookup is cached at the edge for subsequent requests.
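As a rough illustration of this lookup, the sketch below asks a stand-in control plane where a service is deployed, picks the closest core location, and remembers the answer for later requests. Several things here are assumptions made for the example: the `CoreLocator` class, the `lookup_regions` callback, the region names, and the coordinates are hypothetical, and great-circle distance is only a stand-in for whatever "nearest" means in practice.

```python
import math
from typing import Callable

Coord = tuple[float, float]  # (latitude, longitude) in degrees


def haversine_km(a: Coord, b: Coord) -> float:
    """Great-circle distance between two points in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


class CoreLocator:
    """Runs at one edge location; caches control-plane answers per service."""

    def __init__(self, edge: Coord, cores: dict[str, Coord],
                 lookup_regions: Callable[[str], list[str]]):
        self._edge = edge
        self._cores = cores
        self._lookup = lookup_regions      # hypothetical control-plane call
        self._cache: dict[str, str] = {}

    def nearest_core(self, service: str) -> str:
        if service not in self._cache:
            regions = self._lookup(service)  # only on the first request
            self._cache[service] = min(
                regions, key=lambda r: haversine_km(self._edge, self._cores[r]))
        return self._cache[service]


# Made-up data: two core locations and an edge location near Paris.
cores = {"fra": (50.11, 8.68), "was": (38.90, -77.04)}
lookups = []

def fake_control_plane(service: str) -> list[str]:
    lookups.append(service)
    return ["was", "fra"]

locator = CoreLocator((48.86, 2.35), cores, fake_control_plane)
chosen = locator.nearest_core("my-api")
chosen_again = locator.nearest_core("my-api")  # answered from the edge cache
```

Here both calls resolve to the hypothetical `fra` location, and the stand-in control plane is contacted only once. A real implementation would also need to expire cached entries when a service is redeployed or moved.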

Second Hop: the Core, mTLS, Ingress Gateways, and the Service Mesh

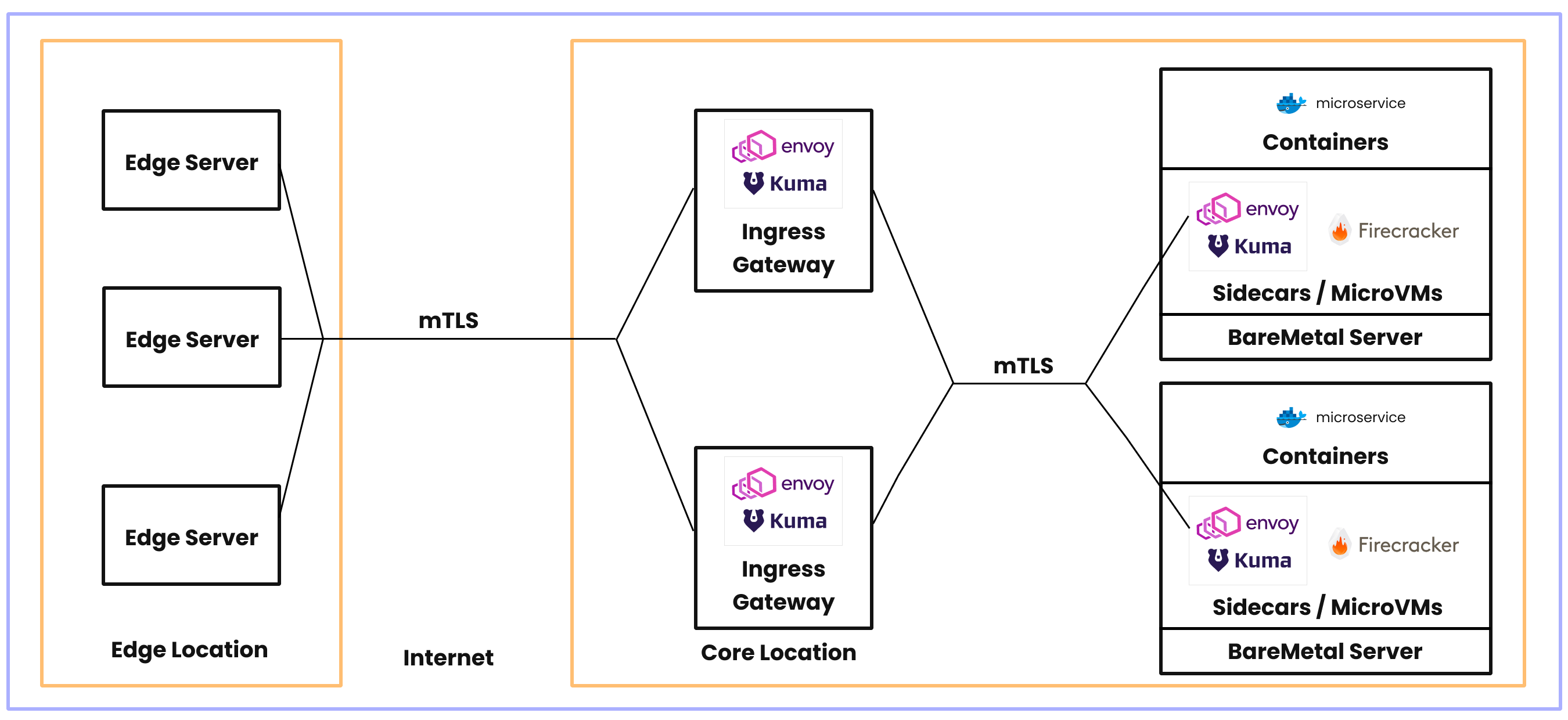

Now, the edge knows in which core location the service is located, but it doesn't know on which hypervisor (aka bare metal machine) the service is running. The traffic is then routed through our Service Mesh:

- first to what we call an Ingress Gateway located in the Core location, which will load-balance the traffic between the different microVMs hosting the service

- finally through a sidecar proxy which will decrypt the traffic before transmitting it locally to the right microVM where the service is running.

Let's dig into what a Service Mesh is and how it works on our Serverless Platform.

The Service Mesh: Kuma and Envoy

Our service mesh is critical in providing a seamless experience on the network: it provides key features including local load balancing, service discovery, and end-to-end encryption.

The service mesh is composed of a data plane and a control plane:

- The data plane is composed of proxy instances that are attached to service instances. These proxies pass network traffic between the services to which they are attached.

- The control plane is a set of management processes that handle the behavior of the proxies.

We use Kuma, an open-source control plane, to orchestrate our service mesh, and we use the sidecar model. Since Kuma packages Envoy, the proxies that actually run are Envoy proxies. Envoy is a high-performance distributed proxy designed for microservice architectures.

If you're not familiar with service mesh technologies and concepts, we wrote a dedicated post about it: Service Mesh and Microservices: Improving Network Management and Observability.

Now that we have introduced the notion of Service Mesh, let's circle back to our request.

From the Ingress Gateway to the final Hop

When a request reaches the Ingress Gateway of a core location, the Ingress Gateway uses service discovery to locate an Envoy proxy instance running alongside an instance of the requested service. Then, the Ingress Gateway performs local load balancing to direct the incoming request to that proxy instance.
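The discovery and load-balancing steps can be sketched in a few lines. This is a toy model and not how Envoy or Kuma implement them: the `IngressGateway` class, its methods, and the addresses are hypothetical, and round-robin is assumed as the balancing policy because the exact policy is not specified here.

```python
from collections import defaultdict


class IngressGateway:
    """Toy gateway: a service registry plus round-robin over sidecar addresses."""

    def __init__(self) -> None:
        self._registry: dict[str, list[str]] = defaultdict(list)
        self._cursors: dict[str, int] = defaultdict(int)

    def register(self, service: str, sidecar_addr: str) -> None:
        """Service discovery: a sidecar becomes known for a service."""
        if sidecar_addr not in self._registry[service]:
            self._registry[service].append(sidecar_addr)

    def deregister(self, service: str, sidecar_addr: str) -> None:
        """Remove a sidecar, for example when its instance stops."""
        if sidecar_addr in self._registry[service]:
            self._registry[service].remove(sidecar_addr)

    def route(self, service: str) -> str:
        """Local load balancing: pick the next sidecar in round-robin order."""
        sidecars = self._registry.get(service)
        if not sidecars:
            raise LookupError(f"no sidecar registered for {service!r}")
        index = self._cursors[service] % len(sidecars)
        self._cursors[service] = index + 1
        return sidecars[index]


gateway = IngressGateway()
gateway.register("my-api", "10.0.0.11:15001")  # made-up sidecar addresses
gateway.register("my-api", "10.0.0.12:15001")
picks = [gateway.route("my-api") for _ in range(3)]

gateway.deregister("my-api", "10.0.0.11:15001")
remaining = gateway.route("my-api")
```

Three consecutive requests alternate between the two registered sidecars, and after one of them is deregistered all traffic goes to the remaining instance.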

This proxy instance, or sidecar, is running alongside an instance of the requested service. This service instance consists of the code running in a container that exists inside a Firecracker microVM. Firecracker microVMs are lightweight isolated environments running directly on top of bare-metal servers.

The role of the sidecar is to simplify and secure communications to its attached service by decrypting incoming traffic, encrypting outbound traffic to other services, and providing service discovery capabilities. Since the sidecar and the Firecracker microVM share an isolated namespace on the bare-metal server, communication passes between them securely and efficiently.

You can learn more about our orchestration and virtualization stack in our posts The Koyeb Serverless Engine: from Kubernetes to Nomad, Firecracker, and Kuma, and Firecracker MicroVMs: Lightweight Virtualization for Containers and Serverless Workloads.

Once the request is processed by the workload running in the container, the response returns via the path it took to find the container. It passes through the Ingress Gateway, which returns it to the same edge location, which in turn returns the response to the source of the request, the user.

Zero-Trust network: Securing the Service Mesh connections using mTLS

To secure the traffic between the edge and the core location but also inside of the core location, we use mTLS (Mutual TLS).

mTLS ensures that all connections are:

- coming from an authenticated peer presenting a valid certificate. This protects the services from any unwanted direct access.

- encrypted when going over the internet and over internal networks.

mTLS is used to secure communications between:

- the edge and the Ingress Gateways

- the Ingress Gateways and the sidecars

- the sidecars during inter-service communications

Concretely, services deployed on the platform and running in multiple regions of the world get built-in, native encryption and authentication of connections, for both client-to-service and inter-service communication. The platform completely abstracts the complexity of securing these connections for you.
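To show what "mutual" means in practice, here is a minimal sketch of a server-side TLS context that refuses any peer without a valid certificate, written with Python's standard `ssl` module. On the platform this is handled by the Envoy proxies, so the snippet is purely illustrative: the `build_mtls_server_context` function, its parameters, and the TLS 1.2 minimum version are assumptions made for the example.

```python
import ssl
from typing import Optional


def build_mtls_server_context(ca_file: Optional[str] = None,
                              cert_file: Optional[str] = None,
                              key_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a server context that requires a client certificate (mTLS)."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed floor for this sketch
    # The "mutual" part: the handshake fails unless the peer presents a
    # certificate that chains to a trusted CA.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        # CA used to verify certificates presented by connecting peers.
        ctx.load_verify_locations(cafile=ca_file)
    if cert_file:
        # This server's own identity, presented to connecting peers.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


# Without file paths, the context is built but has no CA or identity loaded.
context = build_mtls_server_context()
```

In a real deployment the three file paths would point to the mesh CA certificate and the workload's own certificate and key, which a service mesh typically issues and rotates automatically.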

Supporting TCP and UDP connections with Global Load-Balancing

Right now, our global network supports connecting to your services using HTTP and HTTPS connections. As the internet is not only about HTTP, we aim to extend the platform capabilities by providing support for direct TCP and UDP.

This will involve some more BGP and some additions to our architecture, but that's for another post!

Enjoy powerful networking seamlessly with the Koyeb Serverless Platform

We provide a secure, powerful, and developer-friendly serverless platform. Offering this serverless experience entails providing seamless global networking to enable deployment across continents.

Two options to go from here:

- If you want to discover the Koyeb serverless experience, you can sign up today.

- If you want to work on a global networking stack and help build a serverless cloud service provider, we're hiring!

By the way, you can use Koyeb to host web apps and services, Docker containers, APIs, event-driven functions, cron jobs, and more. The platform has built-in Docker container deployment and also provides git-driven continuous deployment!

Don't forget to join us over on our community platform!