Enabling gRPC and HTTP/2 support at the edge with Kuma and Envoy

Our mission is to let you deploy your apps globally in less than 5 minutes with high-end performance. This requires us to be meticulous about every component of our infrastructure layer and to support high-level protocols like WebSockets, HTTP/2, and gRPC.

There are two major things in the infrastructure impacting performance: hardware and network. On the hardware side, we deploy all apps inside microVMs on top of high-end bare metal servers around the world. On the network side, we provide edge acceleration and a global load-balancing layer to route to the nearest region.

Another component driving performance is protocols. Protocols like HTTP/2 and gRPC reduce latency and enable a number of new applications. Today, we will explore how we added end-to-end HTTP/2 and gRPC support on the platform.

- HTTP/2 and gRPC: performance benefits and use-cases

- Enabling end-to-end HTTP/2 and gRPC

- Negotiating the protocol between the edge and our global load balancer

- HTTP/2 and gRPC at the edge and inside the service mesh

- What about HTTP/3?

HTTP/2 and gRPC: performance benefits and use-cases

As a serverless platform, we need to support all protocols used by APIs and full-stack apps to communicate with the outside world and between each other. It's hard to run a state-of-the-art micro-service architecture without gRPC, right? If you're more into tRPC, don't worry: it's also supported!

We previously supported HTTP/2 up to the edge, but not end-to-end: your request was converted to HTTP/1 at the edge. While this improved performance, some use cases didn't work, such as gRPC, which runs on top of HTTP/2.

HTTP/2 benefits

As a refresher, here is why HTTP/2 matters and what it brings compared to the good old HTTP/1.1:

- Multiplexing: Enables sending multiple requests over a single connection. This decreases the number of connections made, ultimately reducing latency and improving response times.

- Prioritization: Allows clients to specify the order in which resources are loaded. Critical resources can be loaded first to improve performance and user experience.

- Header compression: Frequent header values are encoded to reduce the size of header fields, which reduces the amount of data needed to be sent.

- Server push: Allows servers to send data to clients before it is explicitly requested.

- Bi-directional streaming: Enables real-time communication.

HTTP/2 was released in 2015 but is still not automatic everywhere as we will see later.

gRPC benefits

Then, there is gRPC.

Here is the brief: gRPC is a high-performance remote procedure call framework that uses HTTP/2 as its underlying protocol. It also uses a binary serialization format called protobuf (Protocol Buffers) to transport data. Since these messages are smaller than JSON or XML equivalents, they are more efficiently transmitted across networks and processed, which reduces latency and improves application performance.
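To make the size difference concrete, here is a minimal sketch that hand-encodes a single integer field the way the Protocol Buffers wire format does and compares it with the JSON equivalent. The message (one field `id = 1` set to 150) is a hypothetical example, and the comparison ignores gRPC's own message framing and HTTP/2 headers, so treat it as an illustration rather than a benchmark.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a Protocol Buffers base-128 varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        low_bits = value & 0x7F
        value >>= 7
        if value:
            out.append(low_bits | 0x80)  # continuation bit: more bytes follow
        else:
            out.append(low_bits)
            return bytes(out)


def encode_uint_field(field_number: int, value: int) -> bytes:
    """Encode one varint field: a tag (field number + wire type 0), then the value."""
    tag = (field_number << 3) | 0  # wire type 0 means varint
    return encode_varint(tag) + encode_varint(value)


# Hypothetical message with a single integer field `id = 1` set to 150.
protobuf_payload = encode_uint_field(1, 150)
json_payload = json.dumps({"id": 150}, separators=(",", ":")).encode()

print(len(protobuf_payload), "bytes as protobuf")
print(len(json_payload), "bytes as JSON")
```

In a real service you would generate this encoding from a `.proto` file with the official tooling instead of writing it by hand; the point here is only that field names never travel on the wire.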

Voilà, you're up to date, let's dive into how we added support for end-to-end gRPC and HTTP/2!

Enabling end-to-end HTTP/2 and gRPC

We are currently using an Envoy proxy orchestrated by Kuma, which we call the GLB, in combination with Cloudflare's edge network to load-balance traffic globally. Together, they route traffic across all of our regions based on the user's location.

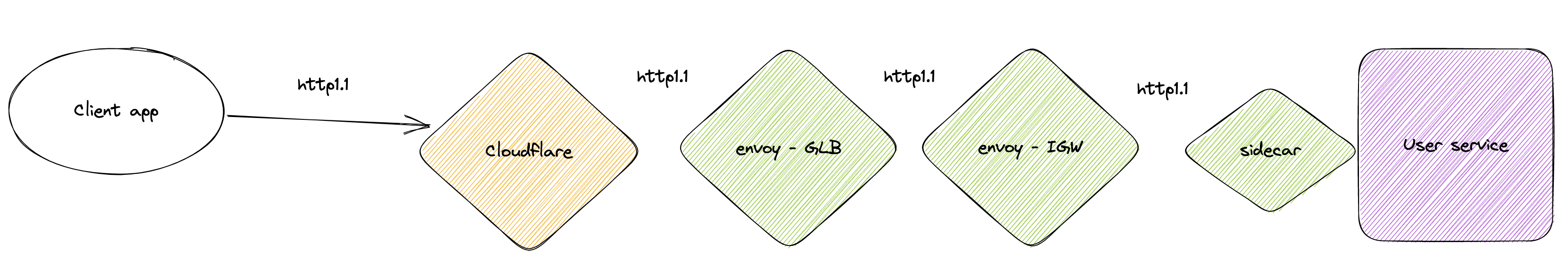

Here is a super simplified schema of our architecture when a client app sends an HTTP/1.1 request to a service running on our platform.

The request goes through:

- Cloudflare edge location

- From there it travels to our global load balancer (GLB)

- The GLB routes it to the ingress gateway (IGW) in the region where the service is located.

- The IGW sends the request to the sidecar of the requested service.

- The sidecar passes it to the service.

All of this using HTTP/1.1 and without ever translating protocols.

Curious about the role of all of these components? We wrote about it in Building a Multi-Region Service Mesh with Kuma/Envoy, Anycast BGP, and mTLS.

Understanding what happens behind the scenes of Cloudflare's dashboard for network options

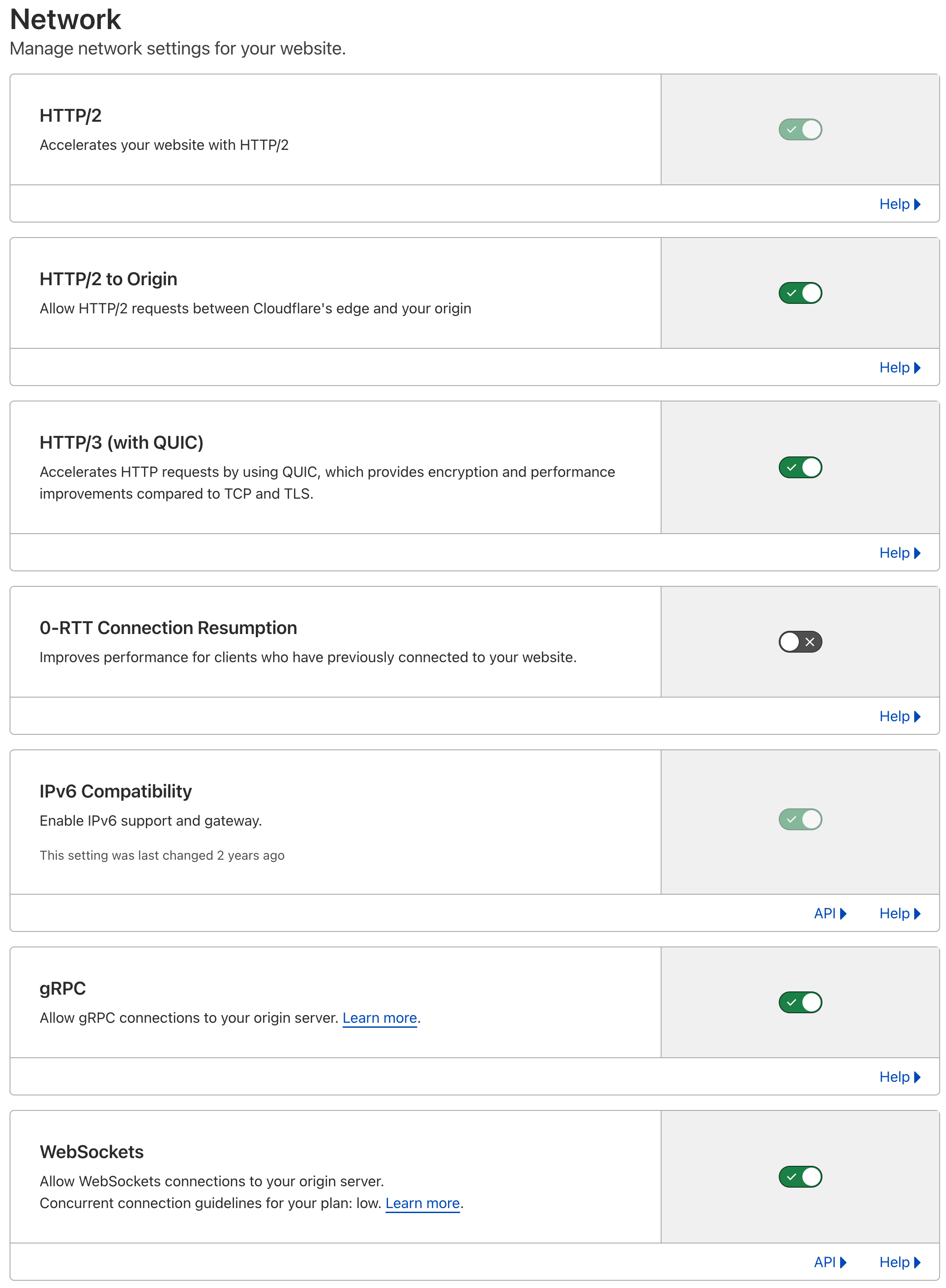

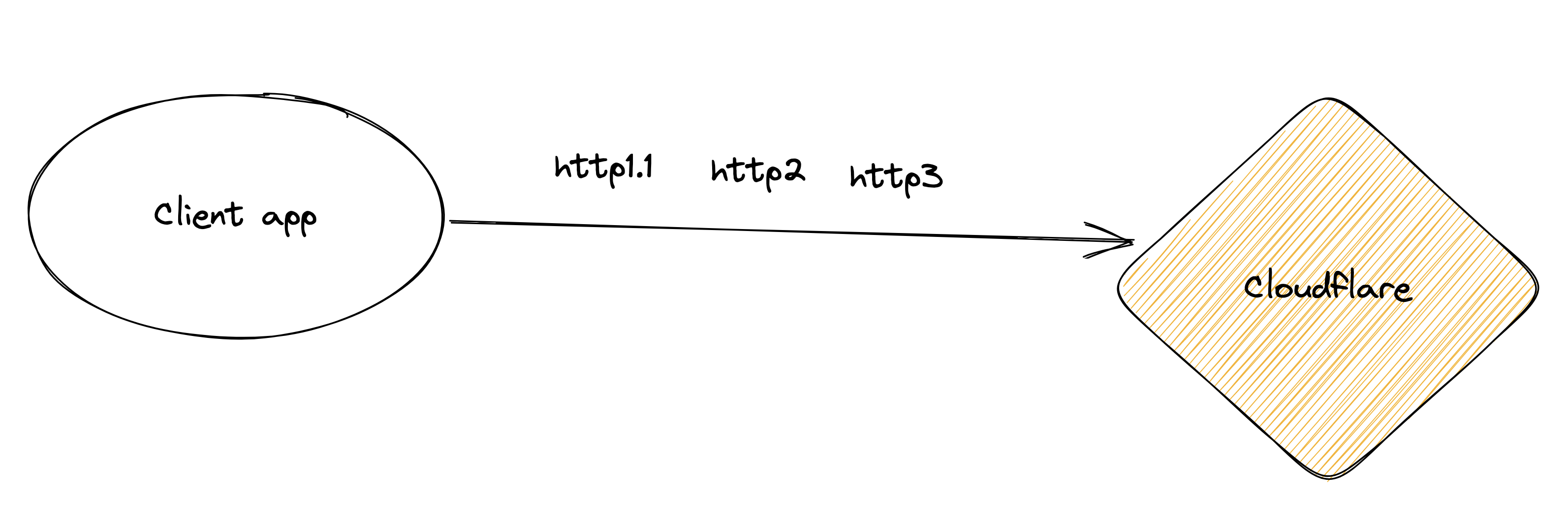

Cloudflare offers a large variety of features and supports the major protocols: HTTP/1.x, HTTP/2, HTTP/3, gRPC, and WebSocket. The dashboard lets you manage the various network options by simply clicking toggles, but what’s happening behind the scenes might not be what you expect.

The theory looks like this: you want to support HTTP/2, HTTP/3, gRPC, and WebSockets? No problem, just click on the right toggle and it will instantly enable support for the selected protocol.

Depending on your configuration and your browser, you will most likely no longer use HTTP/1 to talk to your service. The negotiation of the protocol between the browser and the web server is well explained in Cloudflare's documentation:

A browser and web server automatically negotiate the highest protocol available. Thus, HTTP/3 takes precedence over HTTP/2.

To determine the protocol used for your connection, enter example.com/cdn-cgi/trace from a web browser or client and replace example.com with your domain name. Several lines of data are returned. If http=h2 appears in the results, the connection occurred over HTTP/2. Other possible values are http=http2+quic/99 for HTTP/3, and http=http/1.x for HTTP/1.x.
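If you want to script this check, the trace output is a list of `key=value` lines, so a small parser is enough. The sketch below assumes you already have the response body as text; the sample body is abbreviated and made up for illustration, and the function names are ours. It only recognizes the `http=` values listed in the quote above, so anything else is reported as unknown.

```python
def parse_trace(body: str) -> dict:
    """Turn the key=value lines of a /cdn-cgi/trace response into a dict."""
    fields = {}
    for line in body.splitlines():
        key, separator, value = line.partition("=")
        if separator:  # skip blank or malformed lines
            fields[key.strip()] = value.strip()
    return fields


def protocol_family(http_value: str) -> str:
    """Map the `http` field to a protocol family, using the values quoted above."""
    if http_value == "h2":
        return "HTTP/2"
    if http_value.startswith("http2+quic"):
        return "HTTP/3"
    if http_value.startswith("http/1"):
        return "HTTP/1.x"
    return "unknown"


# Abbreviated, made-up sample of a trace response body.
sample_body = "h=example.com\nhttp=h2\ntls=TLSv1.3\n"

fields = parse_trace(sample_body)
print(protocol_family(fields.get("http", "")))
```

Keep in mind that the result depends on the client making the request. Python's standard `urllib`, for instance, only speaks HTTP/1.1, so fetch the trace with a browser or an HTTP/2-capable client if you want to see `h2`.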

This explains how protocols are determined between users and the first web server, in our case the edge network. But what about the traffic to your service running on the platform? How is the protocol selected to talk to the origin server, and then to your service?

Negotiating the protocol between the edge servers and our global load balancer

When using an edge network, a CDN, or any kind of proxy in front of your application, your application is referred to as the origin.

When any proxy communicates with the origin, a negotiation happens to choose the protocol to use when making a request. The protocol negotiation is not based on the user-requested protocol, but is based on the settings of the proxy and the protocol supported by the origin.

In our case, the origin server is our global load balancer.

Advertising HTTP/2 support through ALPN on our global load balancer

HTTP/2 traffic will only be received if you enable the ALPN advertisement on your origin. ALPN, or Application-Layer Protocol Negotiation, is a TLS extension that lets the client and server agree on which application protocol to use during the TLS handshake. Here is the documentation for Envoy:

alpn_protocols

(repeated string) Supplies the list of ALPN protocols that the listener should expose. In practice, this is likely to be set to one of two values (see the codec_type parameter in the HTTP connection manager for more information):

- “h2,http/1.1” If the listener is going to support both HTTP/2 and HTTP/1.1.

- “http/1.1” If the listener is only going to support HTTP/1.1.

There is no default for this parameter. If empty, Envoy will not expose ALPN.

Let's add h2,http/1.1 et voilà! We've configured ALPN and are announcing that we support both HTTP/2 and HTTP/1.1.
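To check from the outside what a listener actually negotiates, you can act as a TLS client that offers both protocols and look at what the server picks. This is a minimal sketch using Python's standard `ssl` module; the helper names and the `example.com` host in the comment are placeholders, not part of our setup.

```python
import socket
import ssl
from typing import Optional

# Protocols the client offers, in order of preference.
OFFERED_PROTOCOLS = ["h2", "http/1.1"]


def build_client_context() -> ssl.SSLContext:
    """Create a TLS client context that offers HTTP/2 and HTTP/1.1 via ALPN."""
    context = ssl.create_default_context()
    context.set_alpn_protocols(OFFERED_PROTOCOLS)
    return context


def negotiated_alpn(host: str, port: int = 443, timeout: float = 5.0) -> Optional[str]:
    """Return the ALPN protocol selected by the server, or None if it selected none."""
    context = build_client_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls_sock:
            return tls_sock.selected_alpn_protocol()


# Usage (placeholder host, requires network access):
# print(negotiated_alpn("example.com"))
```

A result of `h2` means the listener advertises HTTP/2. `http/1.1` or `None` suggests that `h2` is missing from its ALPN list.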

Easy, no? Yes, but that's not all.

Enabling HTTP/2 to origin

Now that ALPN is set up, we should simply need to switch the "HTTP/2 to origin" toggle on in the dashboard, right? True, but there is a catch. Let's see what happens when we switch this toggle.

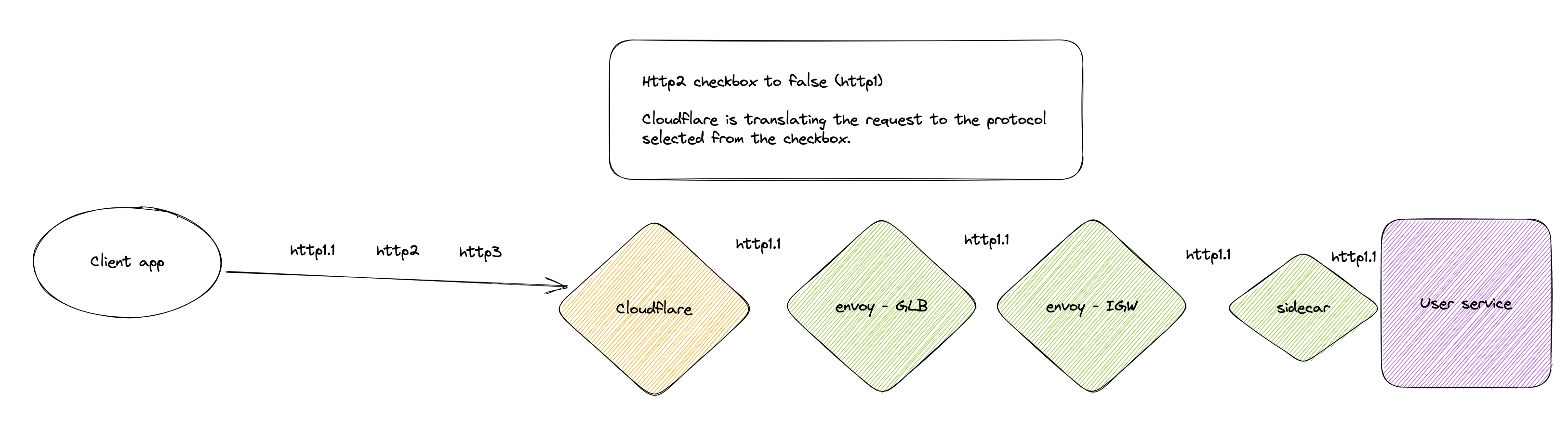

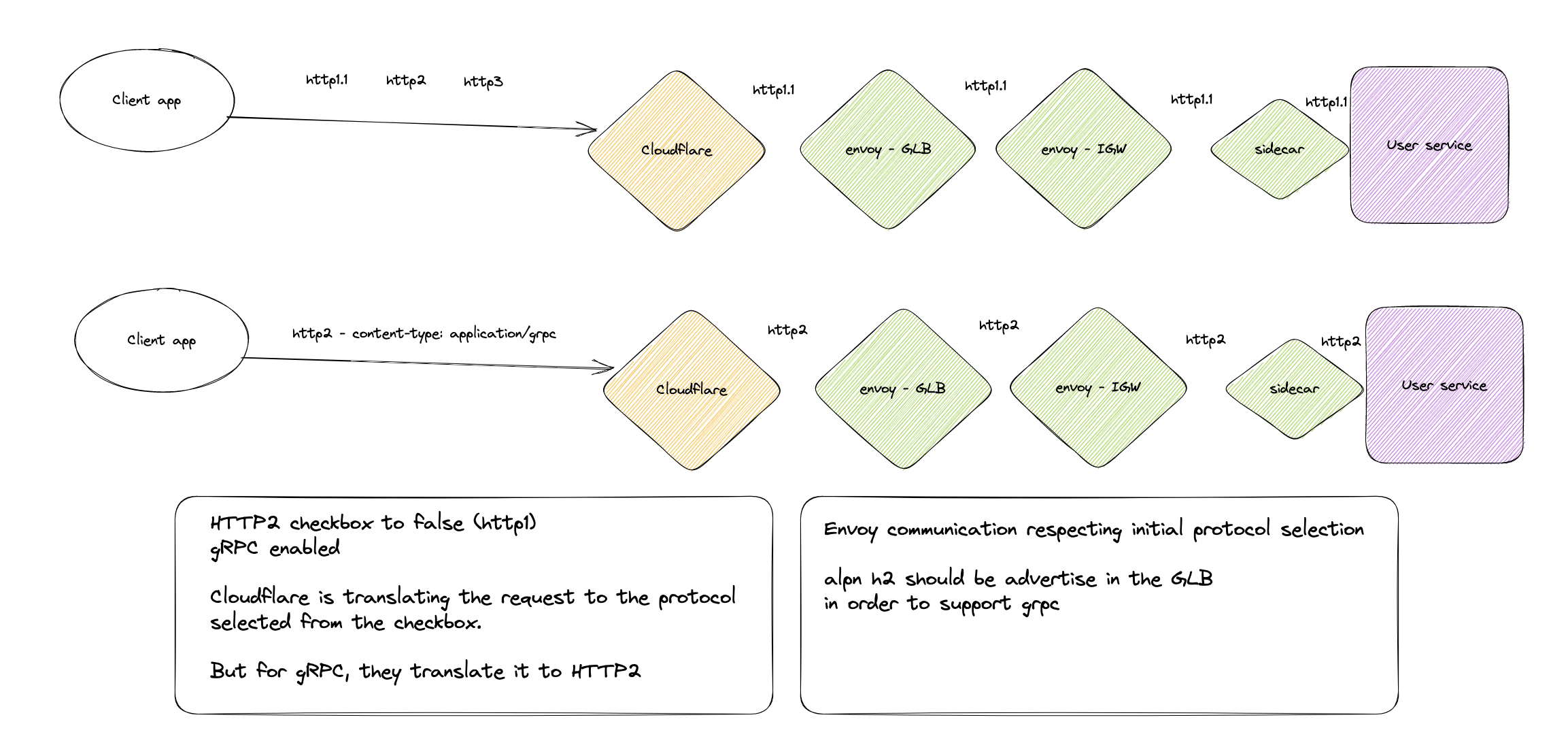

Toggle off: full HTTP/1.x

If you disable HTTP/2 to origin at Cloudflare's level, your origin will only receive requests ‘translated’ to HTTP/1.x. Here is what that would look like applied to our architecture:

With HTTP/2 to origin disabled, Cloudflare translates HTTP/2 requests to HTTP/1.x before sending them to our origin server.

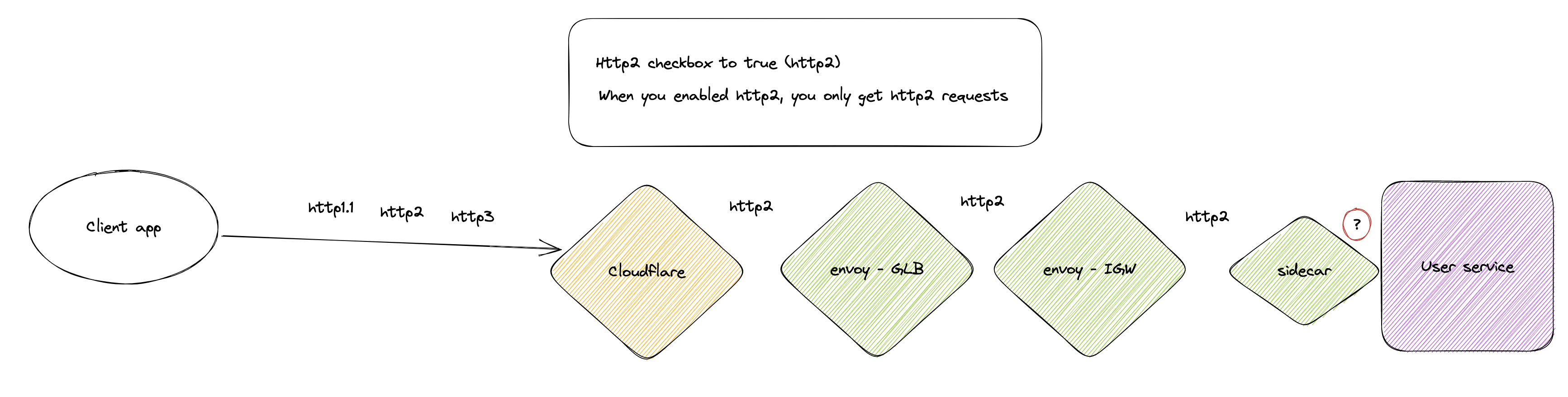

Toggle on: full HTTP/2

On the other hand, if you enable HTTP/2, you will only receive requests in HTTP/2. Both HTTP/1 and HTTP/3 will be translated. Here is a quick schema of what our architecture looks like once we enable HTTP/2 support to origin:

With the HTTP/2 toggle enabled, all requests will be translated to HTTP/2.

This explains how we added HTTP/2 support, but here comes the catch: what if an application running on our platform does not use HTTP/2 and needs HTTP/1.1? This breaks.

The specifics of gRPC

As described in the Cloudflare gRPC documentation, there are several requirements for any backend that wants to support gRPC, including:

- HTTP/2 must be advertised over ALPN.

- Use application/grpc or application/grpc+<message type> (for example: application/grpc+proto) for the Content-Type header of gRPC requests.

If your origin is set up with HTTP/1 and does not meet these requirements, including the ALPN advertisement, the request reaches your origin translated to HTTP/1, which leads to a protocol mismatch in your service.
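The two requirements listed above are easy to express as a check. The sketch below is only an illustration of those rules with function names of our own choosing; it is not how Cloudflare or Envoy implement the detection.

```python
GRPC_MEDIA_TYPE = "application/grpc"


def is_grpc_content_type(content_type: str) -> bool:
    """Accept application/grpc and application/grpc+<message type>."""
    # Drop any parameters such as "; charset=utf-8" and normalize case.
    media_type = content_type.split(";", 1)[0].strip().lower()
    if media_type == GRPC_MEDIA_TYPE:
        return True
    prefix = GRPC_MEDIA_TYPE + "+"
    return media_type.startswith(prefix) and len(media_type) > len(prefix)


def meets_grpc_requirements(alpn_protocols: list, content_type: str) -> bool:
    """Check both requirements: h2 advertised over ALPN and a gRPC Content-Type."""
    return "h2" in alpn_protocols and is_grpc_content_type(content_type)


print(meets_grpc_requirements(["h2", "http/1.1"], "application/grpc+proto"))
print(meets_grpc_requirements(["http/1.1"], "application/grpc"))
```

Note that `application/grpc-web` is deliberately not matched here, since it is a different content type from the ones listed in the requirements.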

That being said, you can enable gRPC when HTTP/2 to origin is disabled. Yes...

With Envoy communication respecting the initial protocol selection and HTTP/2 advertised through ALPN, you will get HTTP/2 traffic only for gRPC. You will get HTTP/1.x for all other kinds of traffic, including if the user-originated traffic is HTTP/2.

This is an interesting behavior but does not allow support for end-to-end HTTP/2 outside of the gRPC case.

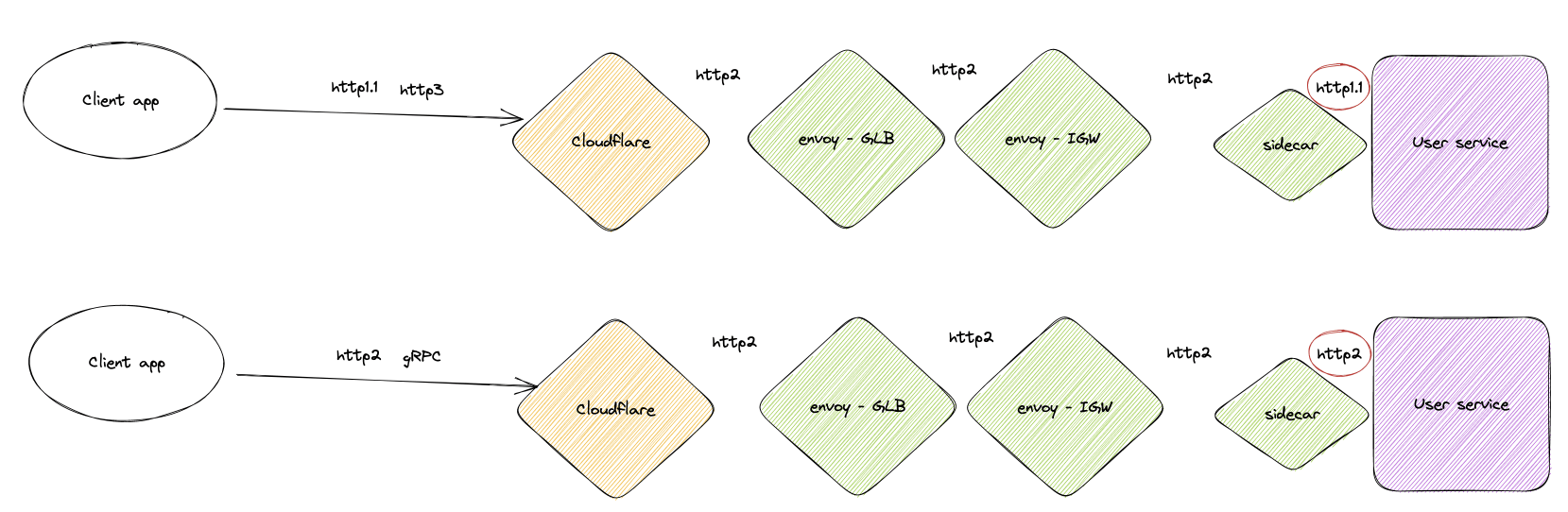
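The combinations described in this section can be summarized as a small decision function. This is a simplified model of the behavior we observed, written with hypothetical names; it is not Cloudflare's actual logic. Notice that the protocol used by the end user does not appear anywhere in the inputs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EdgeSettings:
    http2_to_origin: bool       # state of the "HTTP/2 to origin" toggle
    grpc_enabled: bool          # state of the gRPC toggle
    origin_advertises_h2: bool  # whether the origin lists "h2" in its ALPN protocols


def protocol_to_origin(settings: EdgeSettings, is_grpc_request: bool) -> str:
    """Return the protocol the edge uses to reach the origin in this simplified model."""
    if not settings.origin_advertises_h2:
        # Without the ALPN advertisement, the origin never receives HTTP/2.
        return "HTTP/1.x"
    if settings.http2_to_origin:
        # Toggle on: every request is translated to HTTP/2.
        return "HTTP/2"
    if settings.grpc_enabled and is_grpc_request:
        # Toggle off, gRPC enabled: only gRPC requests keep HTTP/2.
        return "HTTP/2"
    return "HTTP/1.x"


toggle_off = EdgeSettings(http2_to_origin=False, grpc_enabled=True, origin_advertises_h2=True)
print(protocol_to_origin(toggle_off, is_grpc_request=True))
print(protocol_to_origin(toggle_off, is_grpc_request=False))
```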

Supporting both HTTP/1.x and HTTP/2

To support both HTTP/1.x and HTTP/2, we added a protocol selector to the platform, so users running their services on the platform can indicate to us (specifically to the Envoy sidecar running alongside their service) which protocol to use to query that service.

HTTP/2 and gRPC at the edge and inside the service mesh

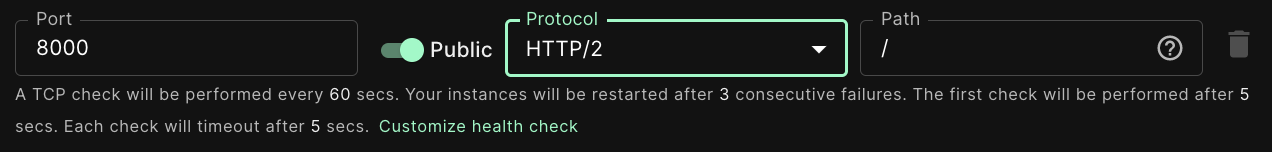

We have added a protocol selector to our control panel and CLI to support applications running on our platform that require HTTP/2 and gRPC for their public traffic. This feature enables you to indicate the type of traffic your service expects, ensuring seamless communication between your service and its requests.

When you use the protocol selector, you are letting the Envoy sidecar know which protocol it should use to reach your service:

- For services expecting HTTP/1 traffic, use the default HTTP protocol.

- For services using HTTP/2 and gRPC, select HTTP/2 in the protocol selector.

If needed, the Envoy sidecar translates the protocol to make the request in your specified protocol.
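Conceptually, the selector boils down to a per-service setting that tells the sidecar which protocol to speak to your service. Here is a rough sketch of that idea; the enum and function names are hypothetical and do not reflect our actual control plane code or Envoy's configuration model.

```python
from enum import Enum


class ServiceProtocol(Enum):
    HTTP = "http"    # default: the service expects HTTP/1.x
    HTTP2 = "http2"  # the service expects HTTP/2, which also covers gRPC


def upstream_protocol(selected: ServiceProtocol) -> str:
    """Protocol the sidecar uses to reach the service for a given selector value."""
    return "HTTP/2" if selected is ServiceProtocol.HTTP2 else "HTTP/1.1"


def needs_translation(incoming_protocol: str, selected: ServiceProtocol) -> bool:
    """True when the incoming request's protocol differs from what the service expects."""
    return incoming_protocol != upstream_protocol(selected)


# An HTTP/2 request arriving for a service left on the default HTTP setting.
print(needs_translation("HTTP/2", ServiceProtocol.HTTP))
```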

As for supporting HTTP/2 and gRPC inside the mesh, this is automatic thanks to Envoy. There is no L7 processing, and you are the only one who controls the protocols used between your services. The request is only encapsulated in mTLS and is not translated to any other protocol, so you can use raw TCP, HTTP, HTTP/2, or any other protocol that runs over TCP.

What about HTTP/3?

HTTP/3 to origin is not currently supported by Cloudflare. Don't worry, you will be the first to know when it’s available and implemented on the platform. You can vote for this feature and track its progress on our feedback request platform.

Enjoy native support of HTTP/2 and gRPC

You now know everything, and if you don't want to deal with the complexity of ALPN or build this kind of infrastructure yourself, you can simply deploy on Koyeb!

Ready to give the platform a try? Sign up and receive $5 per month of free credit. This lets you run two nano services and use up to 512MB of RAM each month. 😉

Interested in working on these types of technical challenges? We're hiring! Check out our open positions on our careers page.

Want to stay in touch? Join us in the friendliest serverless community or tweet us. We'd love to hear from you!